What is PostgreSQL®?

Join us for a browse about the ins and outs of PostgreSQL® on this page of information and resources.

This post is your all-purpose PostgreSQL introduction. We'll cover a lot of questions:

- What is a PostgreSQL database?

- What does PostgreSQL do, and what's special about it?

- How does PostgreSQL work?

- How does PostgreSQL fit into a data architecture?

In this post:

- What is PostgreSQL and why you should care

- What does PostgreSQL do?

- PostgreSQL use cases and users

- PostgreSQL features

- How PostgreSQL does it

- Aiven for PostgreSQL

- Getting started with PostgreSQL at Aiven

- Further reading

What is PostgreSQL and why you should use it

Definition and uses

PostgreSQL, also known as Postgres, is a flexible open-source object relational database management system. PostgreSQL can handle a huge variety of use cases, from single machines to data warehouses to web services with many concurrent users. PostgreSQL uses and extends SQL (hence the name), and is broadly extensible to a range of use cases beyond mere transactional data.

PostgreSQL is strongly supported by its global development group of companies and individual contributors.

For definitions of the most common SQL terms and concepts, which also apply to PostgreSQL, take a look at (Postgre)SQL concepts and terms

What does PostgreSQL do?

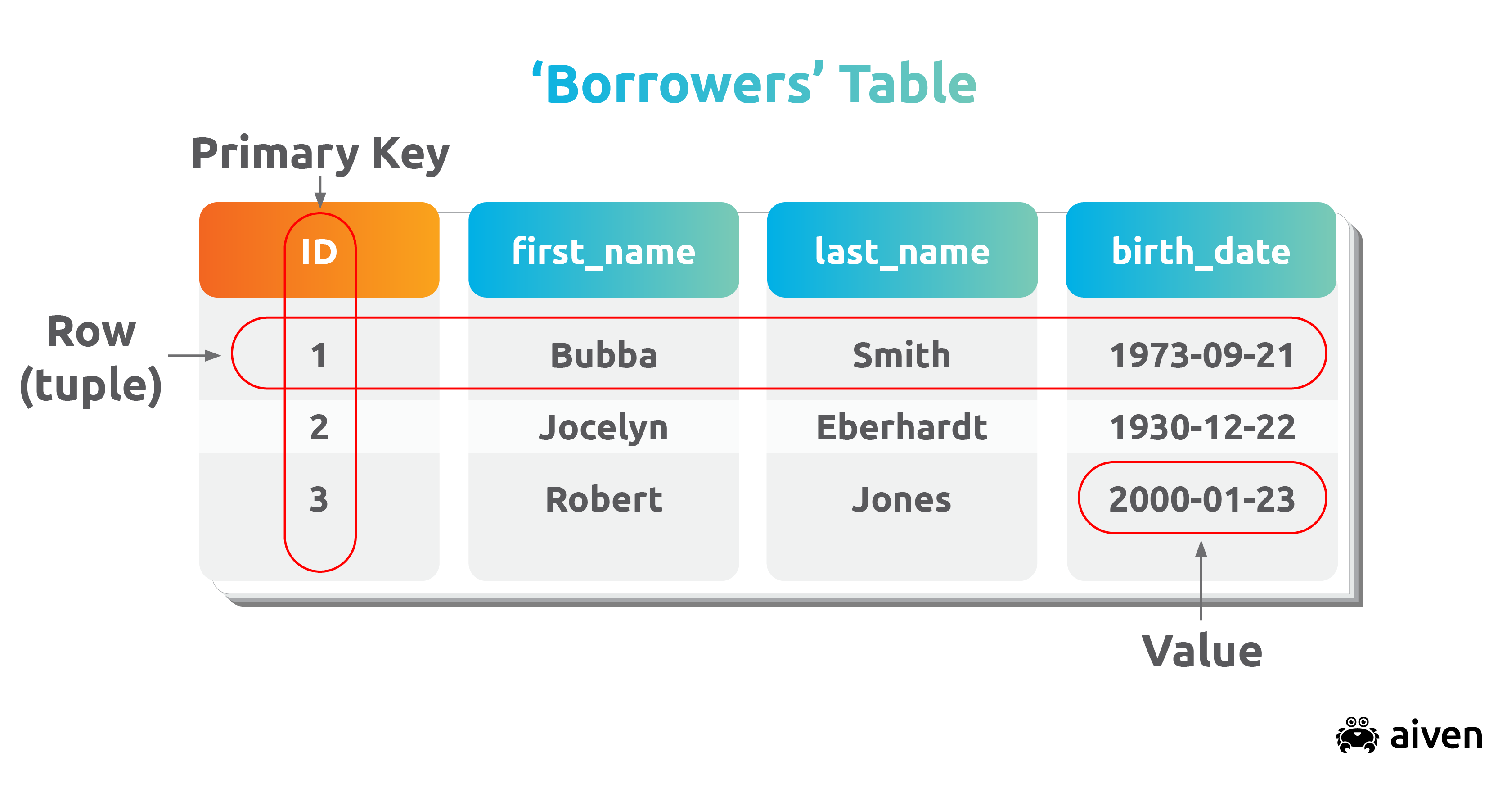

As a relational database, PostgreSQL stores data in tables (called relations) containing the tuples representing entities (such as documents and people) and relationships (such as authorship). Relations hold fixed-type attributes representing entity properties (such as a title) along with a primary key. Attribute types can be either atomic (such as integer, floating point, or boolean) or structured (such as an array, nested JSON, or a procedure).

PostgreSQL supports transactions with ACID properties. This means transactions must support four attributes:

Atomicity — transactions are considered complete units; a transaction can either completely succeed or completely fail - in the case of failure, the database state is left unchanged.

Consistency — a database, between transactions, can only exist in a valid state; all data written to the database must adhere to extant constraints, triggers, cascades and related combinations.

Isolation, — a function of concurrency control, ensures that data is not corrupted by illegal or concurrent transactions — as transactions are treated as if they happened sequentially.

Durability — ensures that a transaction remains committed even when the system fails — typically, completed transactions are recorded, for example, in a write-ahead log.

PostgreSQL use cases and users

According to the db-engines ranking, PostgreSQL is the fourth most popular database management system (and most popular open source DBMS).

Today in addition to enterprise users, a number of 3rd party vendors, consultancies and independent groups derive products from, consult, support, and offer auxiliary enhancements for PostgreSQL. There are also integrations with artificial intelligence (AI) and machine learning (ML), many cloud versions, hardware-optimized versions, and so forth. The Use cases for PostgreSQL on our blog has more to say.

By far the biggest industry sector using PostgreSQL is the computer software industry, followed by information technology and services. Other industries include hospitals and health care, higher education, financial services, telecommunication, education, marketing and advertising. Government agencies, including the FBI, are also users.

PostgreSQL features

For more information about the types of features that PostgreSQL supports, there's a great breakdown at PostgreSQL , so we’ll look at just a few of the main ones here.

Automatically updateable views

In addition to SQL support, PostgreSQL offers automatically updatable views. This means that a view resulting from a query will automatically update when a transaction updates the data serving that view.

Materialized views

A materialized view is a data object that contains a given query’s results. You can query a materialized view just as you would a database table directly. They can also be refreshed on schedule, and must be because they are essentially a snapshot of the data at a certain point in time.

Triggers

Triggers execute code in response to transactional or other changes to a table or view. When an item is updated in the inventory, you might want to automatically record the update time and the username performing the operation in dedicated columns for tracking purposes.

Foreign keys

(See also Primary key Foreign keys exist to ensure valid references between the child and parent tables; for example, every row in the orders table needs to have a valid customer reference associated with the order itself.

Stored procedures

Stored procedures are maintained in the data dictionary of a database, and may contain several combined SQL procedures each. They provide applications access to commonly-used data validation, data manipulation, access control, or other methods. Stored procedures can be defined by users and run when needed.

Pub/sub logical replication

PostgreSQL offers a means for publish/subscribe logical replication. Essentially, a PostgreSQL instance can act as an event cache between applications and event consumers.

(You could check out this Future PostgreSQL blog article where we cover new improvements to the replication protocol.)

Change data capture

There is also a means for change data capture, meaning that prior database changes can be rewound and replayed, a capability essential for disaster recovery.

Text search

PostgreSQL can support the sort of SQL queries that look for column values based on some textual contents combined with wildcards, for example:

Loading code...

This could return records containing book titles like <u>Zen and the Art of Motorcycle Maintenance</u> and <u>Zen Flesh, Zen Bones</u>. Those wildcards could be positioned also at the start of the search string. The same query, but with the search string as %Zen% could return the results above -- in addition to titles where ‘Zen’ isn’t the initial word in the string, like <u>The Three Pillars of Zen</u> and <u>The Essential Teachings of Zen Master Hakuin</u>.

In addition to text search, PostgreSQL supports a tsvector (a text-search) data type.

The function to_tsvector lets you create a list of tokenize a string to derive a tsvector. to_tsquery is used to query the tsvector for occurrences of certain words or phrases.

Recursive queries

PostgreSQL 14 methods to generate recursive queries. It also has new SEARCH and CYCLE features that are very nifty for recursive queries.

json language support

PostgreSQL enjoys excellent json language support. You can define table columns as json or jsonb data types and use json functions to parse and manipulate the content.

Concurrency

PostgreSQL is one of the best-known examples of an open source database which supports concurrent ACID transactions. To achieve this, PostgreSQL may use multiple locks to serialize database changes. When concurrent changes are made, the first transaction may proceed (as a lock is invoked); the second pauses until the first commits or aborts.

Here is an example. Imagine opening two terminal windows. In a psql session, you’d try:

Loading code...

In another psql session, you’d try to run:

Loading code...

Note: Nothing happens until the first transaction is complete.

Reliability and disaster recovery

Before writing a full page image to disk, PostgreSQL periodically writes to a write-ahead log (WAL). This enables PostgreSQL to recover from crashes by replaying the writes in the log.

The full_page_writes parameter in postgresql.conf can be toggled off if there is anything in the system preventing partial writes.

Here's a further read on the topic on the Aiven blog: Data and disaster recovery.

Individual records in WALs are checksummed by a CRC-32 check to validate correctness of record contents (upon restore operations), although there are a few items that bypass the checks, including data pages, and internal data structures.

Security

PostgreSQL has a lot of ways to handle all kinds of security issues. For example, client connections are allowed only via local Unix socket by default (so, not via TCP/IP sockets) unless you use the -i switch to start the server backend. Also, you can blacklist client connections with the pg_hba.conf file in PG_DATA.

Superuser rights to objects are one option to be able to perform a lot of different operations in PostgreSQL. For example, only the PostgreSQL superuser account can read the DB files themselves. By granting these privileges you can copy table data, create publications that publish data automatically, or create subscriptions. They are also important privileges for logical replication.

In fact, each PostgreSQL user takes a username and password. You can assign users to groups, and then assign or restrict access to tables — or even rows — based on these group privileges.

PostgreSQL also offers a range of encryption options like encryption for specific columns, data partitions, across a network, etc.

Server spoofing prevention works by creating a symbolic link to a relocated vulnerable socket file.

Extensibility

PostgreSQL is catalog-driven: data dictionaries — system catalogs — hold information about the database itself, cells, columns, data types, functions, access methods, etc. These catalogs are tables in their own-right: user-queryable and modifiable.

As users can modify databases directly, as well as add dynamically-loadable code changes or user-defined functions (UDFs), many choose PostgreSQL for rapid prototyping applications and data structures.

Extensibility is critical for many organizations with needs specific to a given industry or line of business. When traditional database systems fall short and business users are not themselves database experts, modifying the database may not interrupt availablity nor require core database engine modifications.

In fact, users want seamless solutions. With PostgreSQL you can seamlessly create your own data types, functions, aggregates, operators, operator classes (for index access), data wrappers, and so on, providing the tools and add-ons for domain-specific solutions.

Sometimes extensions become complete products in their own right. One concrete example: PostGIS, which has been modified to support location queries using geographic coordinates. Additionally, [Timescale] is PostgreSQL optimized for time series data.

Here are some ways to use PostgreSQL together with other tools:

- Quickly develop REST APIs with PostgREST

- How to stand up multiregion PostgreSQL with Pulumi

- Secure your database access with HashiCorp Vault

Tool support

Developers these days can enjoy a number of tools to interact with the database that go way beyond psql. pgAdmin supports PostgreSQL across the board and is a long-time favorite of DBAs and developers; we have Aiven-specific setup instructions available. Alternatively, you can connect via psql, another helpful terminal-based front-end tool.

DataGrip, from JetBrains, is a popular integrated development environment (IDE) that works cross-platform and with a range of databases. usql is a universal command-line interface that works with many databases natively. Finally, OmniDB is a complete collaborative workspace for PostgreSQL and other databases.

In addition to core PostgreSQL itself, Aiven developers have contributed to a number of PostgreSQL extensions, including pghoard (for backup and restore), pglookout (for replication monitoring and as a failover daemon), and a range of connectors. Here's a full list of the extensions that work with Aiven versions.

For visualizing data, you can pick the open source analytics tool Grafana, for example. We've got instructions how to integrate PostgreSQL and Grafana with the help of Aiven platform. Grafana is also a great tool to monitor your PostgreSQL metrics.

Alternatively, check out how to monitor the metrics with pgwatch2.

In terms of orchestration, you can manage your PostgreSQL database with Terraform.

Delegating management of your PostgreSQL database

Above we covered many exciting features of how PostreSQL helps you to be in charge of data operations. However, this is not the whole story of managing your database. Working with any database involves a lot of routine operations to achieve high availability, steady performance and bulletproof database security.

As your database and your applications grow, these routine operations will consume significant time and involve substantial cost of accidental errors. That's why at some point of time you might consider using professional support to manage your PostgreSQL database and use external help to perform database administration tasks.

Among these critical error-prone routine operations are

- performing regular backups and restoring data when needed;

- staying on top of the latest security updates and patches, including a straightforward way to perform major version upgrade and to control failover procedures;

- maintaining very large numbers of connections to a database with the help of connection pooling.

Relying on managed services also gives advantages in using and managing extensions, where the recommended extensions are supported and maintained in the same way as PostgreSQL database.

Delegating management of your PostgreSQL database is not an easy choice. However, it can be a two-way door decision. At the end of the day, you can always go back at managing your servers later on, if you stick to open source solutions.

...and so much more

The PostgreSQL home page lists tons of other features - supported data types, more in-depth data integrity features, features supporting concurrency, disaster recovery features, security features, extensibility and text search, including international character support.

How PostgreSQL does it

Core PostgreSQL mandates that data be fully-structured, requiring that it adheres to a specific schema; records added to a database that do not comply will be rejected.

NOTE: PostgreSQL has since evolved to also support JSON/JSONB, XML and other common formats for data ingress/egress.

As an open-source database management system, PostgreSQL users can continually evolve their databases, while switching languages and without need to recompile. While SQL conformance is ongoing, new features are being constantly added. Let’s look at how objects are created and manipulated in PostgreSQL.

As SQL is quite expansive, we’ll only touch on some basics here.

DDL - data definition language

Data definition language (DDL) is used to define data structures, and includes the following commands:

- Data type declaration

CREATE- creates a new database, table, index or procedure.ALTER- modifies an existing database object.DROP- deletes an existing database, table, index or procedure.TRUNCATE TABLE- empties a table for reuse.

For example:

Loading code...

DML - data manipulation language

Data manipulation language is used to work with tables and their contents as in:

INSERT- inserts new rows in a table.UPDATE- updates existing rows in the table.DELETE- deletes rows from a table.SELECT- the main method for querying data from the database.

For example:

Loading code...

Compact syntax for bulk insert

There is an easier way to insert multiple rows at once, using a single command:

Loading code...

In brief: DCL and TCL

DCL - data control language - deals with the permissions and other controls governing access to the database system. It consists of the following statements:

GRANT- grants access privileges.REVOKE- revokes access privileges.

TCL - transaction control language - has to do with the transactions in a database, and consists of the following statements:

COMMIT- commits a transaction.ROLLBACK- in the event of an error, rolls back a transaction.SAVEPOINT- sets a save point in a transaction.SET_TRANSACTION- defines transaction characteristics.

Querying data

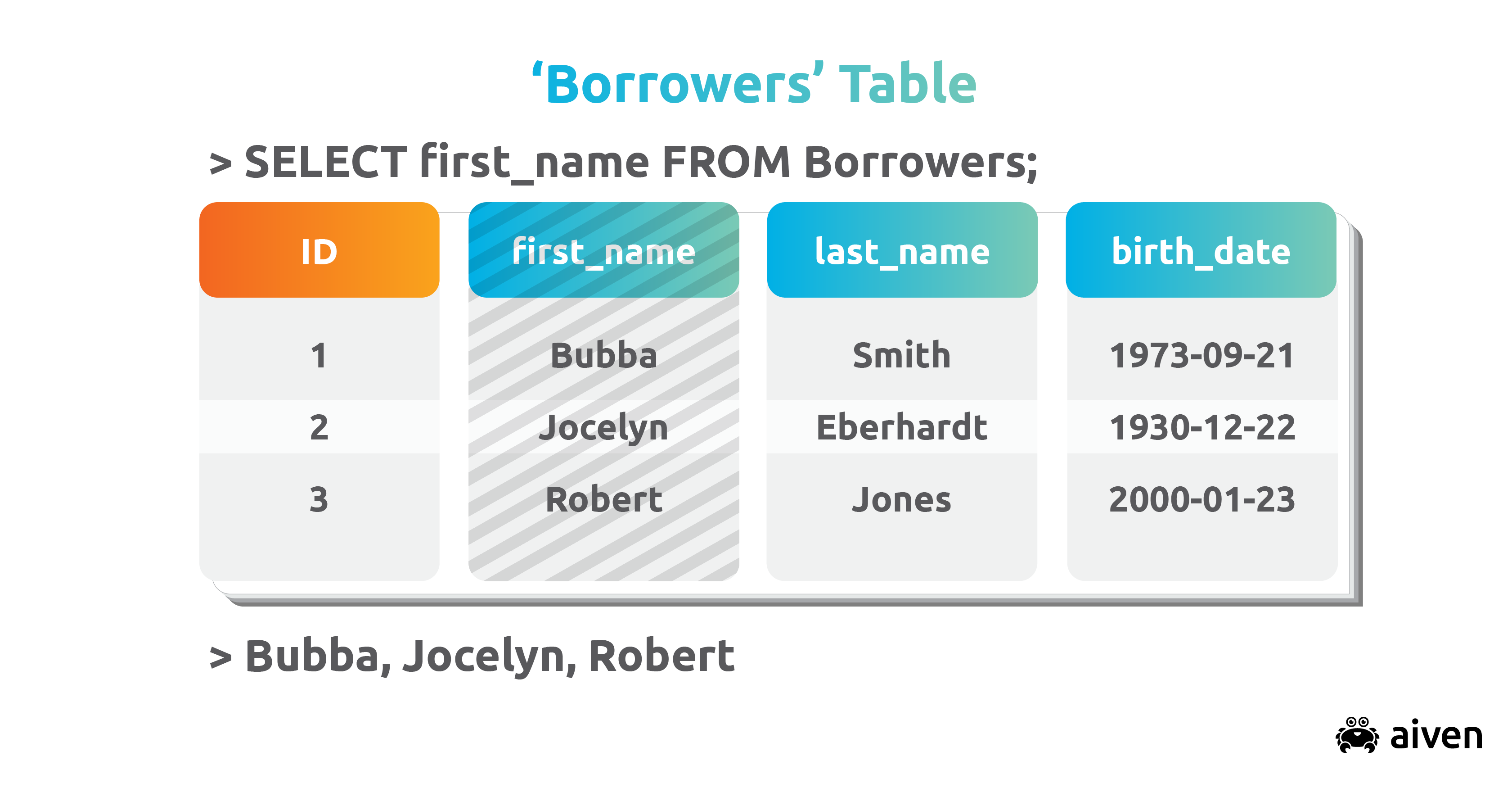

Most querying on PostgreSQL (as in SQL itself) happens via the SELECT statement, as in the following examples:

Loading code...

Queries can be refined in a variety of ways, for example limiting results to a condition:

Loading code...

Limiting results to a specific results count:

Loading code...

Querying only certain fields:

Loading code...

Sorting query results by certain fields:

Loading code...

and so on.

Aiven for PostgreSQL

Aiven for PostgreSQL is our managed and hosted solution for any provider, offering high-availability with automatic updates and backups. Aiven supports various extensions, such as PostGIS and TimescaleDB and provides publish/subscribe logical replication.

Aiven for PostgreSQL is just one part of the data platform we provide. You can integrate it as a part of a system that also includes things like Aiven for Apache Kafka, OpenSearch or Redis.

If you're unsure which cloud to choose, take a look at the latest benchmarks across GCP, AWS and Azure!

Getting started with Aiven for PostgreSQL

To begin with, you could do worse than read the documentation.

Aiven for PostgreSQL is easy to set up. Sign up for 30-days free trial at Aiven Console.

Further reading

Also, there's quite a lot on the Aiven blog about PostgreSQL - check it out for some cool things to use PostgreSQL for.

Stay updated with Aiven

Subscribe for the latest news and insights on open source, Aiven offerings, and more.