The pursuit of happiness with Python and PostgreSQL®

The world and the data in it are seldom perfect. Read to find out how to clean up the data in your PostgreSQL® database so that it's usable for analysis.

Francesco Tisiot

|RSS FeedHead of Developer Experience at Aiven

Creating information is just like any creative process. It starts with finding the materials (in this case, datasources) and continues with cleaning, joining and wrangling datasets. By the time you're finished you've built something beautiful and gained new insights to share - and then you start all over again.

One of the most widely-used languages in the creation of information is Python, loved by data scientists, engineers and analysts alike for its great ecosystem of existing libraries for data wrangling.

This blog post explores three such libraries: pandas, ddlgenerator and psycopg2. These each enable us to clean a dataset and push it to a PostgreSQL database where the data can later be queried and exposed to a huge variety of company figures.

Today, the objective is simple: we will explore a happiness dataset and try to find out where in the world we should move to have joyful life!

Here's the high-level process:

- Get the dataset

- Clean the data

- Create a PostgreSQL instance

- Push the data to PostgreSQL

- Query the Data in PostgreSQL

0. Get the dataset

As mentioned before, begin by finding a dataset. Kaggle is a website widely used in the data science community, providing datasets used for challenges, competitions or learning. They have a nice dataset about World Happiness which only requires a login to be downloaded. It contains 5 CSV files, one per year, listing the happiness ranking of various countries together with some other indicators.

Let's create a folder named happiness and a subfolder named data for storing the files:

Loading code...

Next, download the data from Kaggle and place the 5 CSVs in the happiness/data folder.

1. Clean the data

As happens frequently, source datasets are not always tidy and clean. This is also true for our happiness dataset. When browsing the files we can see that 2015.csv and 2016.csv share the same format of column names and data. But the 2017.csv has the Region column missing, some others named and ordered differently (Generosity and Trust are swapped). The same applies to the files 2018.csv and 2019.csv where we can't find the confidence intervals anymore. Moreover, the year is only contained in the file name, and not in a column.

Before starting our cleaning efforts, it's good practice to create a separate virtual environment every time we start a new Python project. This ensures our default Python environment is not overloaded with possibly conflicting libraries. We can create a virtual environment and enable it like this:

Loading code...

The files need some work in order to produce a consistent view of the data, so let's put on our Python superhero mask. Start by installing the pandas library, which will help us with data manipulation and analysis, by executing the following code in your terminal:

Loading code...

Now we can create a file named prepare.py in our happiness folder and start including some Python code in it. We'll start by importing the library installed earlier, together with os and glob which should be installed by default and enable the interaction with the operative system and pathname pattern expansion respectively.

Loading code...

Next, define the column names that we're going to use in our target DataFrame named all_years_df to store the dataset once cleaned. Add the following lines to the prepare.py file:

Loading code...

Now it's time to bring order to chaos and parse all the CSV files in the data folder. Add the following code to the prepare.py file:

Loading code...

The glob function returns the list of files in the happiness/data folder, that we loop over. For each file, we read the filename with os.path.basename, split the name on the dot (.) and take the first part, extracting only the year information. The file_content line uses pandas' read_csv function to load the file data and populates the new column Year (using the assign function) with the last two digits of the year (int(file_year)-2000).

Next we call the find_columns function, which we'll define soon, to select the correct columns from the source file depending on the year.

After that, we consolidate the column names in the uniformed_columns DataFrame containing only one year of data, and then append it to the all_years_df DataFrame that will contain the whole dataset at the end of the process. Lastly we store the end result in a CSV file named results.csv in the happiness folder.

Picking the correct columns

The missing part is the find_columns function definition where we consolidate the column names and order. As mentioned above, depending on the year, we need to select the correct columns from the source file. Add the following code to the prepare.py file, just after the import statements, to perform the trick on various years:

Loading code...

The function picks the correct column for each year and returns the resulting DataFrame.

It's now time to check that the pipeline is working. With the terminal positioned on the happiness folder let's execute the following:

Loading code...

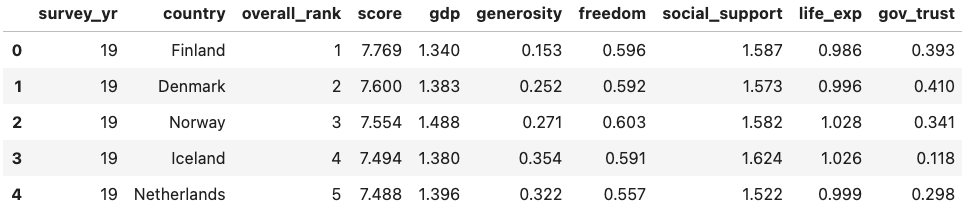

Now check the file results.csv in the happiness folder to verify that the code worked. The results should look like this:

2. Create a PostgreSQL instance

The second step is to store our data in a PostgreSQL table. But before storing it, we'll need a PG Database; let's create one with Aiven CLI in our terminal

Loading code...

The above command creates a PostgreSQL instance named demo-pg on google-europe-west3 using a hobbyist plan. You can review all PostgreSQL plans on our pricing page. To connect to it, we'll need the service uri containing information like host, port, and the default avnadmin user's password. We can retrieve it with:

Loading code...

Now we have a couple of minutes to relax while we wait for the instance to be ready after the following call in the terminal

Loading code...

3. Push the data to PostgreSQL

Once the service is running, go back to Python and prepare the data push.

We'll use the ddlgenerator library to automatically generate the SQL statement to create the PostgreSQL table, and psycopg2 to interact with the database. We can install both with the following line in the terminal:

Loading code...

Now create a new push.py file, add the import, and connect to the database statements. Replace the <SERVICE_URI_OUTPUT> parameter in the following code with the output of the avn service get command above:

Loading code...

The connection is established. We can now create the table and push the data. ddlgenerator provides a nice functionality to create both table DDLs and insert statements from a CSV file. We can append the following code to the push.py file:

Loading code...

After importing the Table class from ddlgenerator, the code creates an instance parsing the results.csv file, with a target table name of HAPPINESS. The last line creates the CREATE table definition SQL for PostgreSQL, including the insert statements for each line (inserts=True). If we print the SQL statement it should look something like the following:

Loading code...

We can now execute the sql statement against the database by adding the following code to the push.py file

Loading code...

We created a cursor, executed the sql statement, committed the change to the database and closed both cursor and connection. It's now time to run the code. With the same terminal on the happiness folder, run the following:

Loading code...

Congrats, the data is now loaded in PostgreSQL.

4. Query the data in PostgreSQL

Let's verify that the data is correctly populated in the PostgreSQL happiness table. Create a new Python file query.py with the following content (as before, edit the connection service uri):

Loading code...

As per the push.py file, we are creating a connection to PostgreSQL, then executing a query to check which countries were in the top three positions over the various years. The result should be similar to:

Loading code...

It looks like Northern European countries are a great place to live! Take particular notice of how Aiven's home country, Finland, has been at the top of the table in the last two years.

The data, now available in a PostgreSQL relational table, can be queried and exposed by all major analytics and reporting tools, making it accessible to a wider audience.

Wrapping up

Finding the right dataset is just the beginning: cleaning, wrangling and publishing the data creates information to help people in their decision-making. Python is a great fit in this area and widely adopted in the data community. The pandas, ddlgenerator and psycopg2 libraries mentioned in the blog enable an easy data handling and push to PostgreSQL. This makes for a solid base for data discovery or corporate reporting.

Some more info:

- Aiven for PostgreSQL: all the info about Aiven's offering

- Aiven PostgreSQL supported extensions: browse the extensions we support

- pandas, ddlgenerator and psycopg2: documentation about the libraries used

--

Not using Aiven services yet? Sign up now for your free trial at https://console.aiven.io/signup!

In the meantime, make sure you follow our changelog and blog RSS feeds or our LinkedIn and Twitter accounts to stay up-to-date with product and feature-related news.

Further reading

Stay updated with Aiven

Subscribe for the latest news and insights on open source, Aiven offerings, and more.