Aiven for Apache Kafka®

One cluster,

infinite possibilities

The future of Kafka is here: a single, cloud-native engine that instantly upgrades your cluster to run both sub-100 ms streams and 80% cheaper batch topics, eliminating silos and cluster sprawl without a migration.

99.99% SLA

50+ connectors

Runs on any cloud

Start for free

Includes $300 in free credits

Trusted by developers at

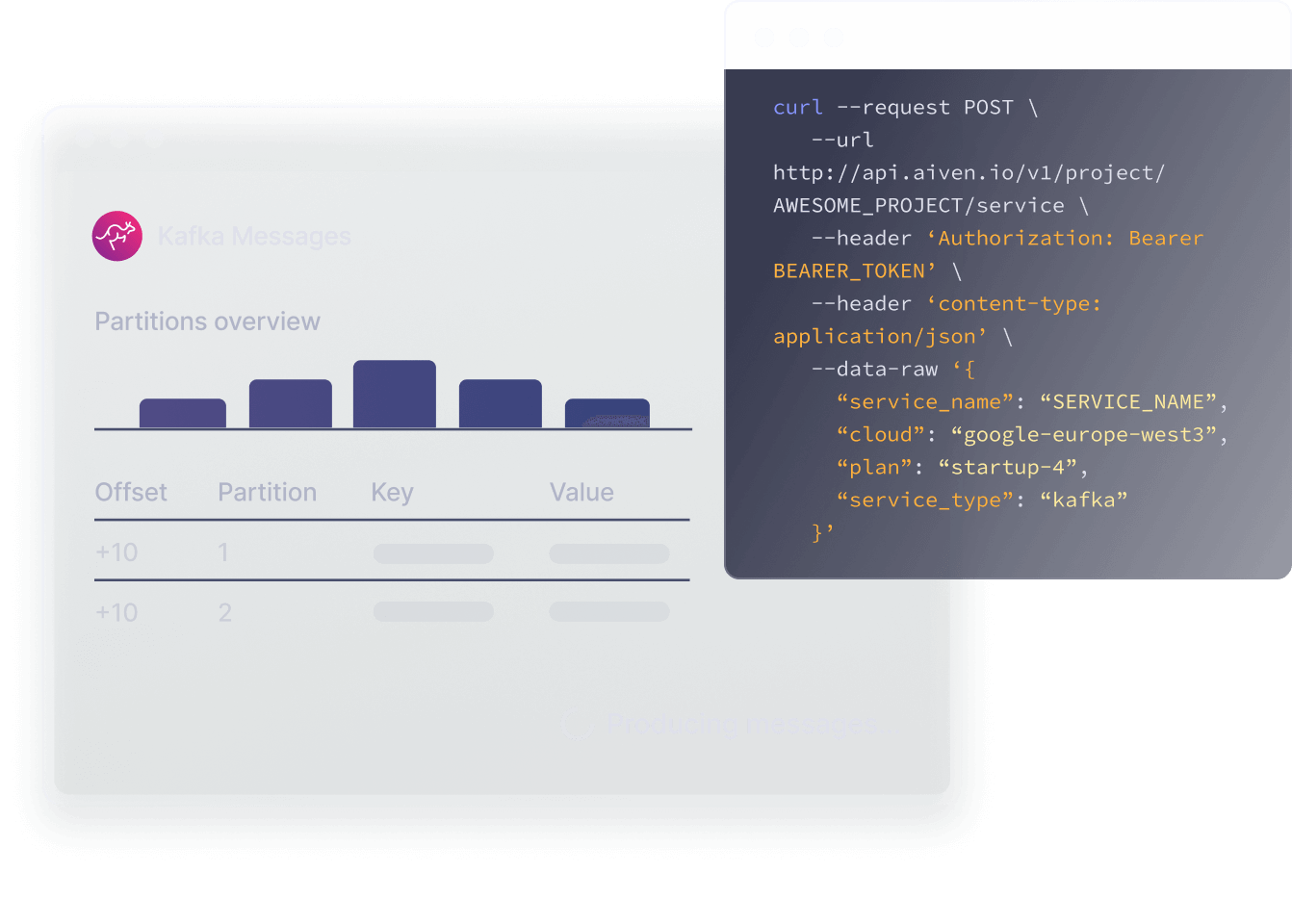

Aiven in action

From zero to first message in 2 minutes

Don't just take our word for it. See how fast you can deploy a fully-managed Kafka cluster, produce your first message, and consume it in real time.
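As a rough sketch of what that first message could look like from Python, assuming the third-party kafka-python client and TLS client-certificate authentication. The host, the topic name `demo-topic`, and the certificate file names are placeholders, not values taken from this page.

```python
from typing import Dict


def tls_settings(bootstrap: str, ca: str = "ca.pem",
                 cert: str = "service.cert", key: str = "service.key") -> Dict[str, str]:
    """Build the keyword arguments shared by the producer and the consumer."""
    return {
        "bootstrap_servers": bootstrap,
        "security_protocol": "SSL",
        "ssl_cafile": ca,      # placeholder file names; use your own service's files
        "ssl_certfile": cert,
        "ssl_keyfile": key,
    }


def first_message(bootstrap: str, topic: str = "demo-topic") -> bytes:
    """Produce one message, then read it back. Needs a reachable cluster."""
    # Imported lazily so tls_settings() works without kafka-python installed.
    from kafka import KafkaConsumer, KafkaProducer

    settings = tls_settings(bootstrap)

    producer = KafkaProducer(**settings)
    producer.send(topic, value=b"hello, kafka")
    producer.flush()  # block until buffered messages have been sent
    producer.close()

    consumer = KafkaConsumer(
        topic,
        auto_offset_reset="earliest",  # start from the oldest retained message
        consumer_timeout_ms=10_000,    # stop iterating after 10 s without messages
        **settings,
    )
    try:
        for record in consumer:
            return record.value
    finally:
        consumer.close()
    raise TimeoutError("no message received within the timeout")
```

Calling `first_message("<host>:<port>")` against a running service should return the bytes that were just produced, provided the topic already exists or automatic topic creation is enabled.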

Streaming platform

Streamlined. Governed. Discoverable.

The modern Kafka platform for developers.

Streamlined topic ownership

Enable application teams to request and own their Kafka topics directly, with built-in approval workflows that ensure governance without bottlenecks.

Topic catalog for discovery

Our comprehensive topic catalog makes it easy for teams across your organization to discover and reuse existing data streams instead of duplicating them.

Quality-first data streams, every time

Our integrated Schema Registry automatically enforces data quality and consistency, preventing breaking changes and simplifying data evolution.

Data flow visualizer

Visually map your data flow across Aiven services and easily expand your pipelines.

Case study

From startup to scale:

Powering mission-critical databases

Connectors

A unified platform for your entire data stack

Connect services with a single click. Move data effortlessly between managed databases and streaming services to build powerful, integrated applications.

Features

Enterprise-grade Kafka, without the complexity

Infinite scalability with tiered storage

From real-time analytics to historical insights, build apps that handle it all. Aiven for Apache Kafka® can scale compute and storage independently with tiered storage.
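One way to picture the split between compute and storage is at the topic level. The sketch below builds a topic configuration using Apache Kafka's tiered storage settings (`remote.storage.enable`, `retention.ms`, `local.retention.ms`). The helper name and the example retention values are illustrative assumptions, and how these settings are exposed on a given service plan is not covered here.

```python
from typing import Dict

MS_PER_HOUR = 60 * 60 * 1000


def tiered_topic_config(total_retention_hours: int,
                        local_retention_hours: int) -> Dict[str, str]:
    """Topic settings that keep recent data on broker disks and the rest remotely.

    Hypothetical helper: only the config keys come from Apache Kafka itself.
    """
    if local_retention_hours > total_retention_hours:
        raise ValueError("local retention cannot exceed total retention")
    return {
        "remote.storage.enable": "true",
        # How long data is kept overall (local plus remote tier).
        "retention.ms": str(total_retention_hours * MS_PER_HOUR),
        # How long data stays on the brokers' local disks.
        "local.retention.ms": str(local_retention_hours * MS_PER_HOUR),
    }
```

For example, `tiered_topic_config(720, 24)` describes a topic that retains 30 days of history while holding only the latest day on local disk.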

Built-in Schema Registry with Karapace

Ensure data integrity and prevent breaking changes with our open-source, Karapace-powered Schema Registry. It's fully integrated and managed for you.
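To illustrate what "preventing breaking changes" means in practice, here is a deliberately simplified backward-compatibility check for Avro-style record schemas. It is a sketch of the idea, not how Karapace implements it: a real registry also handles type promotions, unions, aliases, and nested records, and the function name is hypothetical.

```python
from typing import Any, Dict, List


def backward_compat_problems(old: Dict[str, Any], new: Dict[str, Any]) -> List[str]:
    """List reasons why `new` could not read data written with `old`.

    Simplified rules: a field added in `new` needs a default, and a field
    present in both schemas must keep exactly the same type.
    """
    old_fields = {f["name"]: f for f in old["fields"]}
    problems = []
    for field in new["fields"]:
        previous = old_fields.get(field["name"])
        if previous is None:
            if "default" not in field:
                problems.append(f"new field '{field['name']}' has no default")
        elif previous["type"] != field["type"]:
            problems.append(f"field '{field['name']}' changed type")
    return problems


v1 = {"type": "record", "name": "User", "fields": [
    {"name": "id", "type": "long"},
    {"name": "email", "type": "string"},
]}
v2 = {"type": "record", "name": "User", "fields": [
    {"name": "id", "type": "long"},
    {"name": "email", "type": "string"},
    {"name": "plan", "type": "string", "default": "free"},
]}
```

Here `backward_compat_problems(v1, v2)` returns an empty list, because the added `plan` field carries a default. Dropping that default would be reported as a breaking change.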

Predictive consumer lag monitoring

Get ahead of bottlenecks. Our platform provides insights that help you identify consumer lag early, which is crucial for auto-scaling consumers and keeping data flowing smoothly.
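For readers new to the term, consumer lag is the gap between the newest offset in a partition and the offset a consumer group has committed. A minimal sketch of that arithmetic follows. The function names are hypothetical, and the trend calculation is a plain two-sample rate, not the platform's own predictive model.

```python
from typing import Dict


def partition_lag(end_offsets: Dict[int, int], committed: Dict[int, int]) -> Dict[int, int]:
    """Lag per partition: log end offset minus the group's committed offset."""
    lag = {}
    for partition, end in end_offsets.items():
        # Assumption: a partition with no commit yet is counted from offset 0.
        done = committed.get(partition, 0)
        lag[partition] = max(end - done, 0)
    return lag


def lag_growth_per_second(earlier_total: int, later_total: int, seconds: float) -> float:
    """Positive means consumers are falling behind; negative means catching up."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return (later_total - earlier_total) / seconds
```

A growth rate that stays positive across several samples is the kind of signal that could justify adding consumers before the backlog becomes visible to users.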

Automatic failure recovery

Self-healing clusters with automatic failover ensure your services stay online. We run multi-node clusters across availability zones to guarantee our 99.99% uptime SLA.

Effortless data replication

Use our managed MirrorMaker 2 connector to easily replicate data across clusters for disaster recovery, geo-proximity, or hybrid cloud strategies.

Follower fetcher

Reduce network traffic and lower latency by allowing consumers to read data from replica brokers instead of only the leader broker, improving resource utilization.
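In Apache Kafka, this behavior is driven by rack awareness: the consumer announces its location through `client.rack`, and a broker-side replica selector can point it at an in-sync replica in the same location. The sketch below models that choice in plain Python. The `Replica` type and function name are illustrative assumptions, and the real selector also weighs how caught-up each replica is.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Replica:
    broker_id: int
    rack: str            # for example, an availability zone name
    is_leader: bool = False


def choose_read_replica(replicas: List[Replica], client_rack: Optional[str]) -> Replica:
    """Prefer a replica in the consumer's rack; otherwise fall back to the leader."""
    leader = next(r for r in replicas if r.is_leader)
    if client_rack is None or leader.rack == client_rack:
        return leader
    same_rack = [r for r in replicas if r.rack == client_rack]
    return same_rack[0] if same_rack else leader


replicas = [
    Replica(broker_id=1, rack="az-a", is_leader=True),
    Replica(broker_id=2, rack="az-b"),
    Replica(broker_id=3, rack="az-c"),
]
```

With these replicas, a consumer that sets `client.rack` to `az-b` would be served by broker 2, while a consumer in an unknown zone would keep reading from the leader on broker 1.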

Safeguarded with Aiven Platform

- HIPAA compliant

- PCI-DSS compliant

- GDPR & CCPA compliant

- SOC 2 compliant

- ISO/IEC series compliant

Use cases

The engine for your real-time apps

Stream directly into your data lake

Stream data directly into Iceberg tables and query it instantly, bringing database-like functionality to your data lake.

- Real-time analytics

- Streaming ETL

- Data pipelines

Event-driven microservices

Decouple your services and enable resilient, scalable communication. Use Kafka as the central nervous system for your microservices architecture, allowing teams to build and deploy independently.

- Microservices messaging

- Event-driven architecture

Log & metrics aggregation

Create a unified, scalable observability pipeline. Ship logs and metrics from all your systems into a central Kafka cluster, then sink them into Aiven for OpenSearch® for powerful search, analysis, and visualization.

- Log aggregation

- Real-time monitoring

Change Data Capture (CDC)

Stream every INSERT, UPDATE, and DELETE from your operational databases like PostgreSQL and MySQL into Kafka topics using our managed Debezium connector. Unlock the value of your operational data without impacting source systems.

- Change data capture

- Database replication
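To make the CDC flow concrete, the sketch below applies Debezium-style change events to an in-memory copy of a table. It relies on the `op`, `before`, and `after` fields of the Debezium event envelope. The `id` primary key, the sample rows, and the function name are assumptions made for illustration.

```python
from typing import Any, Dict

Row = Dict[str, Any]


def apply_change(table: Dict[Any, Row], event: Dict[str, Any], key: str = "id") -> None:
    """Apply one change event to `table`, which maps primary key to row."""
    op = event["op"]
    if op in ("c", "r", "u"):
        # create, snapshot read, and update all carry the new row state in `after`
        row = event["after"]
        table[row[key]] = row
    elif op == "d":
        # delete carries the removed row (at least its key) in `before`
        table.pop(event["before"][key], None)
    else:
        raise ValueError(f"unsupported op: {op!r}")


events = [
    {"op": "c", "before": None, "after": {"id": 1, "email": "a@example.com"}},
    {"op": "u", "before": {"id": 1, "email": "a@example.com"},
     "after": {"id": 1, "email": "b@example.com"}},
    {"op": "c", "before": None, "after": {"id": 2, "email": "c@example.com"}},
    {"op": "d", "before": {"id": 2, "email": "c@example.com"}, "after": None},
]

replica: Dict[Any, Row] = {}
for event in events:
    apply_change(replica, event)
```

After the loop, `replica` holds only row 1 with its updated email, which mirrors what a downstream database replica would contain.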

Backed by open source committers

We don't just run Kafka, we help build it. Our team includes dedicated Apache Kafka® open-source committers, ensuring deep expertise and a direct contribution to its evolution.

Cloud providers

Your data. Your cloud. Your choice

Get the ease of a fully managed data service with the cost benefits, security, and data control of running in your own cloud account.

Your path to ensuring data residency and meeting national compliance requirements for sensitive workloads.

Get true cloud flexibility. Deploy fully managed services exactly where you need them for optimal performance and control.