The Future of Personalization: Building Real-Time Retail Context at Scale

How streaming architecture and vector databases power instant, personalised retail experiences

Maulik Parikh

Staff Solutions Architect

TL;DR: Real-time context engineering with Aiven Inkless is a win-win for retailers, saving up to 80% of Kafka's total cost of ownership while providing up-to-the-minute personalisation and recommendations.

Context engineering is the practice of designing systems that decide what information an AI model sees alongside its prompts. The pattern orchestrates systems that gather relevant information from multiple sources, such as conversation history, user data, external documents, and available tools, and organise it within the model's context window.

Real-time context engineering applies these principles to streaming data, feeding AI agents and LLMs with the most current information available from both physical and digital sources. This pattern addresses two key challenges: data staleness, where agents make decisions based on outdated information, and hallucination, where LLMs generate confident but inaccurate responses in part due to missing context.

Major retailers like Shopify, Walmart, and Target use Apache Kafka to handle internet-scale workloads such as transaction processing, clickstream analytics, and inventory updates. Whilst Kafka is well suited to these workloads, it has a significant drawback: cost.

In a typical Kafka deployment, partitions are replicated across availability zones (AZs) for redundancy, and cloud providers charge a fee every time data moves between zones. These fees can account for up to 80% of Kafka's total cost of ownership. This is where Aiven Inkless, Aiven’s implementation of Diskless Kafka, comes in. Diskless Kafka writes partitions directly to object storage, which avoids inter-AZ networking costs by skipping replication entirely, provided we can accept the tradeoff of higher object storage PUT latencies (>400ms for Diskless Topics vs <100ms for classic Kafka topics). Context engineering pipelines can afford these higher latencies, so they are well placed to benefit from the cost savings Inkless provides.

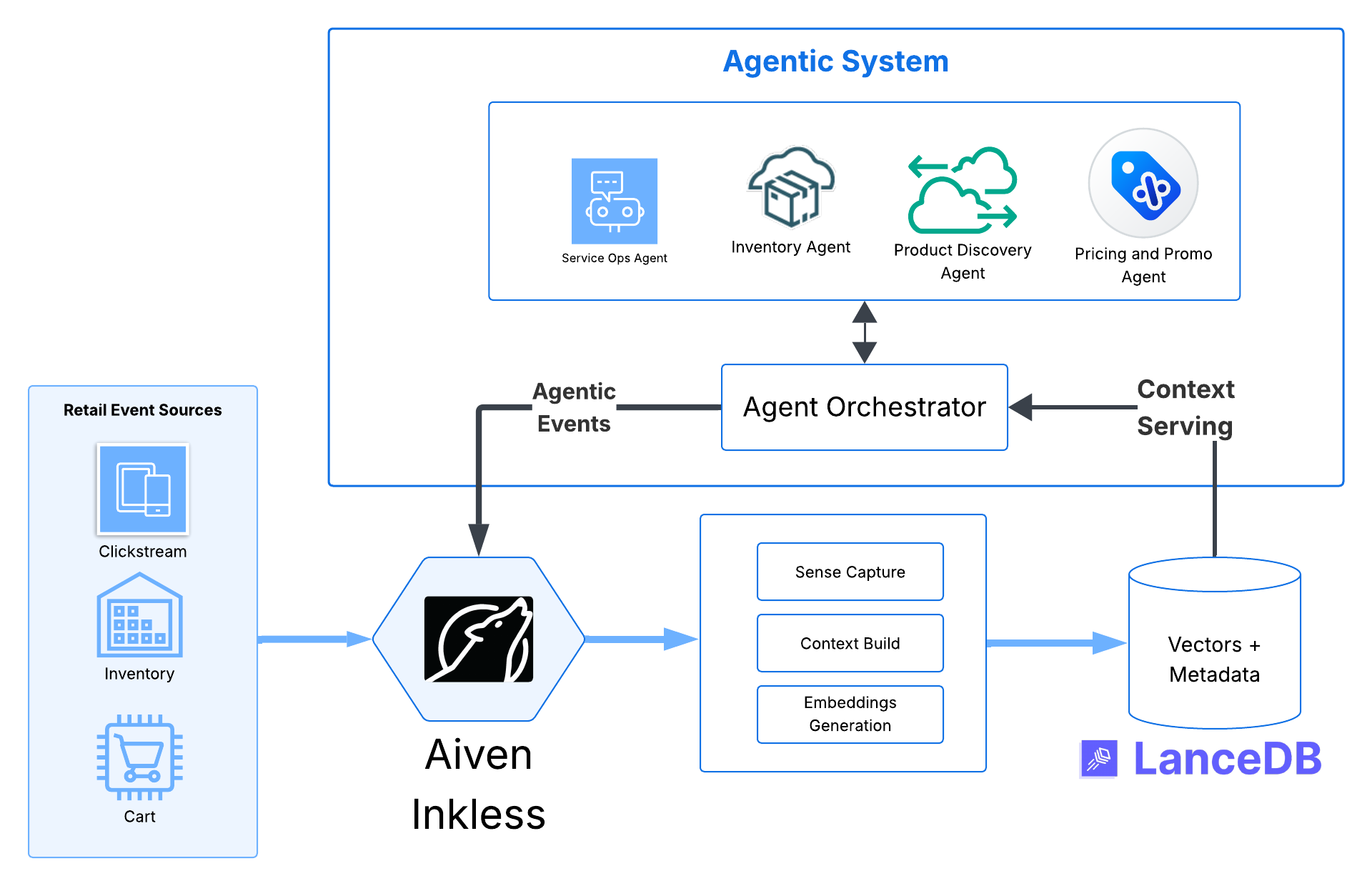
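To make the cost argument concrete, here is a rough back-of-the-envelope sketch of how much traffic crosses zone boundaries for a classic replicated topic. The function name and every parameter are illustrative assumptions rather than Aiven figures: it assumes clients are spread evenly across zones, each replica sits in a different zone, and consumers read from the partition leader. Multiply the result by your provider's inter-AZ rate to get a cost.

```python
def cross_az_traffic_gb(ingest_gb, zones=3, replication_factor=3, consumer_fanout=1.0):
    """Estimate GB crossing zone boundaries for a classic replicated topic.

    Assumptions (illustrative, not from Aiven): clients are spread evenly
    across zones, each replica lives in a different zone, and consumers
    always read from the partition leader.
    """
    if replication_factor > zones:
        raise ValueError("sketch assumes at most one replica per zone")
    # Share of producers that sit in a different zone from the leader.
    produce = ingest_gb * (zones - 1) / zones
    # Every follower copy crosses a zone boundary.
    replicate = ingest_gb * (replication_factor - 1)
    # Share of consumer reads that cross a zone boundary.
    consume = ingest_gb * consumer_fanout * (zones - 1) / zones
    return {
        "produce": produce,
        "replicate": replicate,
        "consume": consume,
        "total": produce + replicate + consume,
    }


estimate = cross_az_traffic_gb(300)
```

Under these assumptions, replication is the largest share of cross-zone traffic, and it is the part that writing directly to object storage removes.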

By combining Aiven Inkless and LanceDB, it is now possible to build pipelines that provide real-time context with sub-second latencies. LanceDB serves as a multi-modal context store, whilst Aiven Inkless provides a high-performance, cost-effective backbone for context engineering pipelines at scale. Let’s take a look at how.

The Workflow: From Stream to Context

1. Ingestion (Aiven Inkless)

Aiven Inkless acts as the cost-effective backbone for capturing raw event data, such as customer support chats, log files, or sensor data, in real time.

- Why Aiven? It provides a managed, secure (SSL-based) Kafka environment so you don't have to manage the broker infrastructure.

- Data Partitioning: You can partition your context by user ID or session ID to ensure related context stays ordered.
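The ordering guarantee comes from the fact that records with the same key always land on the same partition. The sketch below illustrates the idea with a stable hash; `partition_for` is a hypothetical helper, and the MD5-based hash is a stand-in (Kafka's Java client uses a murmur2 hash for keyed records).

```python
import hashlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition with a stable hash (illustrative stand-in)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % num_partitions


# Hypothetical clickstream events keyed by user ID.
events = [
    ("user-42", "view:espresso-machine"),
    ("user-7", "view:kettle"),
    ("user-42", "cart:espresso-machine"),
]

by_partition = {}
for key, payload in events:
    by_partition.setdefault(partition_for(key, 6), []).append((key, payload))
```

Because both `user-42` events hash to the same partition, a consumer reading that partition sees the view before the add-to-cart.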

2. Processing & Embedding (The Bridge)

A stream processor (a Python-based consumer in this example) continuously consumes messages from Aiven Inkless and performs three tasks:

- Chunking: Breaking down long messages into smaller, semantically meaningful pieces.

- Enrichment: Adding metadata (e.g., timestamps, user roles) to help with filtering later.

- Embedding: Converting text into high-dimensional vectors (e.g., using OpenAI text-embedding-3-small or HuggingFace models).
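The three tasks above can be sketched in plain Python. This is a minimal illustration, not the consumer used in production: the message shape (`user_id`, `text`) is assumed, and `toy_embed` is a hashed bag-of-words stand-in that you would replace with a real embedding model.

```python
import hashlib
import math
import re
from datetime import datetime, timezone


def chunk_text(text, max_words=40):
    """Pack whole sentences into chunks of roughly max_words words."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], []
    for sentence in sentences:
        words = sentence.split()
        # Start a new chunk when adding this sentence would exceed the budget.
        if current and len(current) + len(words) > max_words:
            chunks.append(" ".join(current))
            current = []
        current.extend(words)
    if current:
        chunks.append(" ".join(current))
    return chunks


def toy_embed(text, dim=16):
    """Stand-in embedding (hashed bag-of-words, L2-normalised). NOT a real model."""
    vec = [0.0] * dim
    for token in re.findall(r"\w+", text.lower()):
        bucket = int(hashlib.sha256(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def process_message(message, embed=toy_embed, max_words=40):
    """Chunk, enrich, and embed one message (assumed shape: user_id, text)."""
    processed_at = datetime.now(timezone.utc).isoformat()
    return [
        {
            "user_id": message["user_id"],  # enrichment: who said it
            "chunk_index": i,               # enrichment: position in message
            "processed_at": processed_at,   # enrichment: when it was handled
            "text": chunk,
            "vector": embed(chunk),
        }
        for i, chunk in enumerate(chunk_text(message["text"], max_words))
    ]


records = process_message(
    {"user_id": "u1", "text": "My espresso machine leaks. Can I return it? I bought it last week."},
    max_words=6,
)
```

Each record carries both the vector and the metadata, so later queries can filter on `user_id` or time before ranking by similarity.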

3. Storage & Indexing (Lance)

LanceDB is unique because it uses a columnar disk-based format (Lance).

- Fast random access: Lance's columnar format delivers up to 100x faster random access than Parquet, enabling rapid data retrieval.

- Efficient vector search: LanceDB's disk-based ANN indexes enable sub-millisecond similarity search at scale.
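To show what a similarity query returns, here is a small in-memory stand-in. It is deliberately not LanceDB: it does an exact brute-force cosine search over a Python list, whereas LanceDB would use its disk-based ANN index. The row fields (`sku`, `category`, `vector`) and the `search` helper are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(rows, query, k=2, where=None):
    """Return the k rows most similar to query, after an optional metadata filter."""
    candidates = [row for row in rows if where is None or where(row)]
    ranked = sorted(candidates, key=lambda row: cosine(row["vector"], query), reverse=True)
    return ranked[:k]


# Hypothetical rows: metadata stored alongside the vector.
rows = [
    {"sku": "ESP-1", "category": "coffee", "vector": [0.9, 0.1, 0.0]},
    {"sku": "KET-1", "category": "tea", "vector": [0.1, 0.9, 0.0]},
    {"sku": "GRD-1", "category": "coffee", "vector": [0.7, 0.2, 0.1]},
]

top = search(rows, [1.0, 0.0, 0.0], k=2)
tea_only = search(rows, [1.0, 0.0, 0.0], k=2, where=lambda row: row["category"] == "tea")
```

Filtering before ranking is where the metadata added during enrichment pays off.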

Empowering Retailers

As we’ve seen, combining Aiven Inkless and LanceDB makes it possible to build pipelines that provide real-time context with sub-second latencies. Let’s look at some examples of how that can help retailers get the most out of their data.

Real-time Hyper-Personalization

Historically, recommendation engines relied on insights that were hours or days old. Real-time context engineering with Aiven Inkless and LanceDB allows recommendation engines to know what you are looking at right now.

- Flow: As a customer browses a mobile app, their clickstream and view duration data are streamed into Aiven Inkless.

- Transformation: A microservice consumes these events, generates an embedding (vector) representing the customer’s current intent, and updates their profile in LanceDB.

Result: When the customer hits the "Recommended for You" section, the AI agent queries LanceDB to find products that match the current session's vector, rather than just historical data.
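One way to picture the "current intent" vector is as a running blend of the items viewed in the session, where longer views count for more and older views fade. The sketch below is an assumption about how such a microservice might work, not the article's implementation: the decay factor, the 30-second cap, the two-dimensional vectors, and the catalogue are all hypothetical.

```python
import math


def normalise(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else list(vec)


def update_intent(intent, item_vector, view_seconds, decay=0.7):
    """Blend the newest viewed item into the session intent.

    Older views fade by `decay`; longer views weigh more (capped at 30 s).
    """
    weight = min(view_seconds, 30.0) / 30.0
    blended = [decay * i + weight * v for i, v in zip(intent, item_vector)]
    return normalise(blended)


def recommend(intent, catalogue, k=1):
    """Rank catalogue items by dot product with the session intent."""
    def score(sku):
        return sum(i * v for i, v in zip(intent, catalogue[sku]))
    return sorted(catalogue, key=score, reverse=True)[:k]


# Hypothetical unit-length product vectors.
catalogue = {
    "espresso-machine": [1.0, 0.0],
    "coffee-grinder": [0.8, 0.6],
    "yoga-mat": [0.0, 1.0],
}

intent = [0.0, 0.0]
# A 3-second glance at a yoga mat, then 30 seconds on an espresso machine.
for sku, seconds in [("yoga-mat", 3), ("espresso-machine", 30)]:
    intent = update_intent(intent, catalogue[sku], seconds)

ranking = recommend(intent, catalogue, k=3)
```

The long look at the espresso machine dominates the brief glance at the yoga mat, so coffee products rank ahead of it.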

Real-time Sales Promotion Agent

We’ve all seen “flash sale” promotions and dynamic pricing based on demand.

What would a cost-effective version of that look like for an AI sales agent?

- Capture every cart event using Aiven Inkless, process those events using Kafka Streams to calculate demand, and publish “new price” events back to Diskless topics on Inkless.

- For historical data, Iceberg tables can be used to analyse past pricing activity and make informed price forecasts.

- Additionally, multi-modal product experience data (such as images, videos, reels, and live reviews) can be stored in LanceDB.

With product-product and product-user vectors stored in LanceDB, one can then build ML models based on two-tower embeddings that publish hot and trending product states back into Inkless, driving a real-time personalised experience for users.

Result: The AI sales agent immediately starts suggesting that product, with personalised offers, to users who are highly price sensitive!
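The demand calculation in the first step is essentially a windowed count per product. The sketch below shows the idea in plain Python rather than Kafka Streams; the `DemandPricer` class, its thresholds, and the pricing rule are hypothetical, and it assumes events for a given SKU arrive in timestamp order.

```python
from collections import defaultdict, deque


class DemandPricer:
    """Sliding-window demand counter with a simple, hypothetical pricing rule."""

    def __init__(self, base_prices, window_seconds=60, threshold=3,
                 step=0.05, max_uplift=0.25):
        self.base_prices = base_prices
        self.window_seconds = window_seconds
        self.threshold = threshold    # cart events tolerated before the price moves
        self.step = step              # uplift per event above the threshold
        self.max_uplift = max_uplift  # cap on the total uplift
        self.events = defaultdict(deque)

    def on_cart_event(self, sku, timestamp):
        """Record one add-to-cart event and return a 'new price' event."""
        window = self.events[sku]
        window.append(timestamp)
        # Evict events that have fallen out of the window.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        demand = len(window)
        uplift = min(max(demand - self.threshold, 0) * self.step, self.max_uplift)
        new_price = round(self.base_prices[sku] * (1 + uplift), 2)
        return {"sku": sku, "demand": demand, "new_price": new_price}


pricer = DemandPricer({"ESP-1": 200.0})
price_events = [pricer.on_cart_event("ESP-1", t) for t in (0, 10, 20, 30, 40)]
later = pricer.on_cart_event("ESP-1", 200)
```

In a real pipeline each returned dictionary would be published back to a topic, and once demand cools the price returns to its base.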

In-Store Video Analytics

It’s 6:00 AM on a Tuesday at a flagship retail location. The morning delivery truck has just arrived with 400 units of a high-end espresso machine that went viral on social media overnight.

The store manager looks at the floor plan on his tablet. The "AI optimizer" (which hasn't had a data refresh since Sunday) insists that Aisle 6 is the perfect spot because it’s "currently empty."

The reality? Yesterday afternoon, a local shipment of heavy cast-iron cookware was diverted to that exact same aisle due to a leak in the warehouse.

Because the store’s "brain" is running on stale data, the systems clash. The espresso machines arrive, there is zero floor space, and the loading dock becomes a gridlocked nightmare of crates, frustrated staff, and safety hazards. Customers walk in at 9:00 AM to a store that looks like a chaotic warehouse rather than a premium shopping experience.

Here's how real-time context engineering solves this:

- In-store cameras generate heatmap data points that can be streamed via gateways into Aiven Inkless.

- The data is then vectorised and stored in LanceDB.

- This data can be correlated with other incoming events to ensure accuracy of inventory and foot traffic data.

Result: Store staff get the insights to answer real-time questions such as: Are we over capacity in the home department? What is the best aisle and position for the newly arrived high-end espresso machines?
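The espresso machine scenario comes down to trusting only fresh readings. The sketch below is one hedged illustration: the event fields (`aisle`, `ts`, `occupied_fraction`) and both helper functions are hypothetical, and a real system would derive occupancy from the vectorised camera data rather than receive it directly.

```python
def aisle_occupancy(events, now, max_age_seconds=300):
    """Latest occupancy reading per aisle, ignoring readings older than max_age_seconds."""
    latest = {}
    for event in events:
        if now - event["ts"] > max_age_seconds:
            continue  # stale reading: do not let it drive a decision
        current = latest.get(event["aisle"])
        if current is None or event["ts"] > current["ts"]:
            latest[event["aisle"]] = event
    return {aisle: event["occupied_fraction"] for aisle, event in latest.items()}


def best_aisle(occupancy, needed_fraction):
    """Pick the aisle with the most free space that still fits the delivery."""
    free = {aisle: 1 - used for aisle, used in occupancy.items()
            if 1 - used >= needed_fraction}
    return max(free, key=free.get) if free else None


# Hypothetical heatmap-derived events (timestamps in seconds).
events = [
    {"aisle": 6, "ts": 0, "occupied_fraction": 0.0},     # old reading: aisle looked empty
    {"aisle": 6, "ts": 900, "occupied_fraction": 0.95},  # cookware has since arrived
    {"aisle": 3, "ts": 950, "occupied_fraction": 0.2},
]

occupancy = aisle_occupancy(events, now=1000)
choice = best_aisle(occupancy, needed_fraction=0.5)
```

With fresh data, Aisle 6 is correctly seen as full and the delivery is routed to Aisle 3 instead.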

Conclusion

AI is now ubiquitous in the retail industry, but real-time context engineering is still being adopted, so many retailers are essentially asking their AI to operate in the "dark", forcing it to rely on stale data from hours or days ago. The challenge compounds when the business considers the additional cost of human-in-the-loop systems, with workers manually double-checking a system's claims. This practice is becoming increasingly popular as more businesses are held legally liable for the promises of agentic chatbots.

Operational Benefits

- Up to 80% Cost Reduction: Retail generates massive amounts of telemetry. Aiven Inkless's architecture allows you to store months of "just-in-case" data in cheap S3 storage while keeping the "hot" data in memory for immediate use.

- Zero-ETL AI: You don't need to move data between a database and a vector store. Aiven Inkless holds the context, telemetry data, and consistent in-motion state. LanceDB stores the raw product metadata (price, SKU, description) alongside the vectors, as well as multi-modal product experience data (images, video, promos, reels, etc.), simplifying the architecture.

- Consistency: Use LanceDB's snapshotting to ensure that an AI agent's "context" remains consistent during a checkout flow, even if the underlying inventory data is changing rapidly.
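The consistency point can be illustrated with a toy versioned table: every write creates a new immutable version, and a reader pins the version it started with. The `VersionedTable` class is a hypothetical in-memory stand-in that shows the concept only; it is not LanceDB's API, so consult the LanceDB documentation for its table versioning calls.

```python
class VersionedTable:
    """Toy copy-on-write table: each update creates a new immutable version."""

    def __init__(self, rows):
        self._versions = [dict(rows)]

    @property
    def latest_version(self):
        return len(self._versions) - 1

    def update(self, key, value):
        """Write a new version with one row changed and return its number."""
        snapshot = dict(self._versions[-1])
        snapshot[key] = value
        self._versions.append(snapshot)
        return self.latest_version

    def read(self, key, version=None):
        """Read a row from a pinned version, or from the latest if none is given."""
        pinned = self.latest_version if version is None else version
        return self._versions[pinned][key]


table = VersionedTable({"ESP-1": {"price": 200.0, "stock": 5}})

checkout_version = table.latest_version          # agent pins its context here
table.update("ESP-1", {"price": 220.0, "stock": 4})  # inventory changes mid-checkout

quoted = table.read("ESP-1", version=checkout_version)
current = table.read("ESP-1")
```

The agent keeps quoting the price it showed at the start of checkout, while new sessions see the updated row.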

Real-time context engineering is a win-win for retailers. Moving from batch to streaming for analytics has let businesses reap the rewards of timelier insights. Applying the same approach to context engineering offers similar opportunities, such as better recommendations and personalisation based on customer spending habits.

Get started

Ready to build real-time AI products for your business? Book a demo
