7 challenges that data pipelines must solve

The current data landscape presents many challenges for data pipelines to overcome. Read this post to find out what they are.

John Hammink

Developer Advocate at Aiven

What if you built a data pipeline, but couldn't connect to where your data was stored?

Imagine: you're running an international hotel chain with thermostat data written to flat files in an S3 bucket, and energy consumption data logged to an accounting spreadsheet that only the accounting team can access. How could you correlate thermostat and energy consumption data to optimize energy use?

Or picture this: you're running a stock tracking site, but you can only batch import the previous day's stock data once every 24 hours. Can any trader make accurate, well-timed decisions using your platform?

What if you're a mobile game developer monetizing game levels by tracking player progress? Collecting a handful of SDK events into a MySQL server running on commodity hardware under your desk works great for a few players, but what if your game goes viral?

And what if you suddenly needed to collect and accommodate variable-length events, such as when a player advances to the next level and points are tallied along with the player’s name, rank, position, and a timestamp?

Previous attempts (and lessons learned)

Not long ago, these scenarios were common. Data from different sources went into separate silos that many stakeholders couldn't access. Data couldn't be viewed, interpreted, or analyzed in transit. It was almost exclusively processed in daily batches, nowhere near real time.

Data volume and velocity grew faster than homespun pipelines were designed to handle. Data ingestion often failed silently when an incoming event’s schema didn't match the backend’s, resulting in long, painful troubleshoot-and-restart cycles.

Adding insult to injury, data formats and fields kept changing in the race to meet evolving business needs. The result was predictable:

Stale, inconclusive insights, unmanageable latency and performance bottlenecks, undetected data import failures, and wasted time! You need to get your data where you want it, so that you have single, canonical stores for analytics.

Analytics data was typically stored on-premises, in ACID-compliant databases on commodity hardware. This worked until you needed to scale your hardware, or until the available analytics and visualization tools no longer gave analysts what they needed.

What's more, analysts were tied up with infrastructure maintenance and growth-related chores like sharding and replication, not to mention handling periodic software crashes and hardware failures.

On top of that, homespun storage and management tools rarely get the same attention as the product, and the investment is difficult to justify. However, whenever technical debt goes unaddressed, system stakeholders eventually pay the price.

Today, how you host and store your data is crucial to reaching your data analytics goals: managed cloud hosting and storage can free analysts from disaster cleanup, as well as from the repetitive work of maintenance, sharding, and replication.

The Seven Challenges

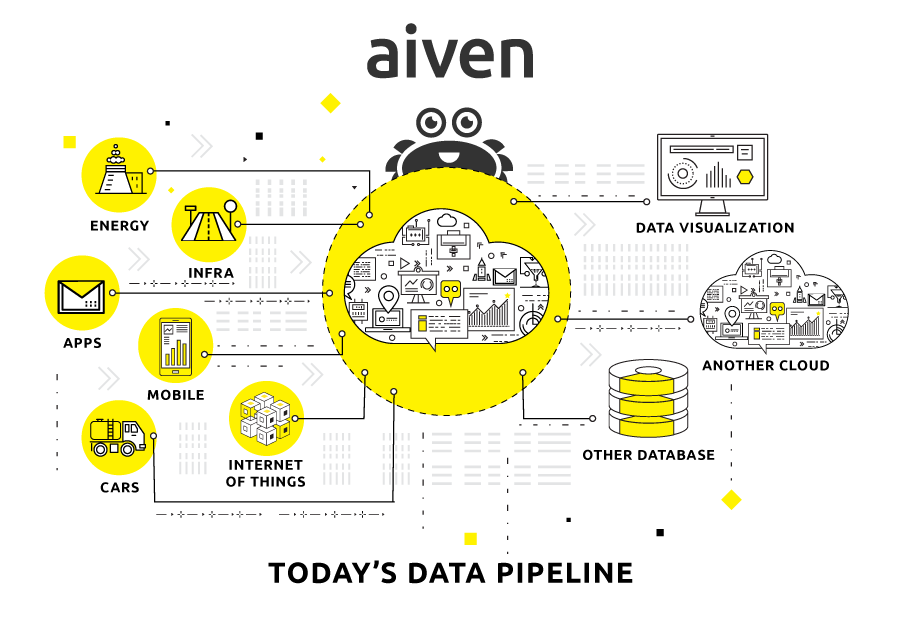

A data pipeline is any set of automated workflows that extract data from multiple sources. Most agree that a data pipeline should include connection support, elasticity, schema flexibility, support for data mobility, transformation and visualization.

Modern data pipelines need to accomplish at least two things:

- Define what data is collected, where, and how

- Automatically extract, transform, combine, validate and load it for further analysis and visualization.
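Those two responsibilities can be sketched as a minimal Python pipeline. This is an illustration under assumptions, not a production design. `Pipeline`, its fields, and the hard-coded sources are all hypothetical; the sources stand in for, say, an S3 flat file and a spreadsheet export. Records that fail validation are collected rather than dropped, so import failures don't go unnoticed.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable

Record = dict  # hypothetical record type: one plain dict per event


@dataclass
class Pipeline:
    """Minimal extract -> transform -> validate -> load pipeline (illustrative sketch)."""
    extractors: list[Callable[[], Iterable[Record]]]  # one callable per data source
    transform: Callable[[Record], Record]
    validate: Callable[[Record], bool]
    load: Callable[[Record], None]
    rejected: list[Record] = field(default_factory=list)

    def run(self) -> int:
        """Push every source through the stages; return how many records were loaded."""
        loaded = 0
        for extract in self.extractors:  # combine records from several sources
            for raw in extract():
                record = self.transform(raw)
                if self.validate(record):
                    self.load(record)
                    loaded += 1
                else:
                    self.rejected.append(record)  # surface failures instead of dropping them silently
        return loaded


warehouse: list[Record] = []
pipeline = Pipeline(
    extractors=[
        lambda: [{"hotel": "ams-1", "temp_c": "21.5"}],                      # e.g. a flat file
        lambda: [{"hotel": "ber-2", "temp_c": "19.0"}, {"hotel": "ber-3"}],  # e.g. a spreadsheet export
    ],
    transform=lambda r: {**r, "temp_c": float(r["temp_c"])} if "temp_c" in r else r,
    validate=lambda r: isinstance(r.get("temp_c"), float),
    load=warehouse.append,
)
print(pipeline.run())     # 2
print(pipeline.rejected)  # [{'hotel': 'ber-3'}]
```

Passing each stage in as a plain function keeps the stages swappable. In a real pipeline each one would be backed by a connector, a message queue, or a database client.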

1. Getting your data where you want it

To get a complete picture from your data, you need to get it where you want it. What sense would it make to import sensor data but keep sales and marketing data isolated? You could correlate and map trends within each data pool separately, but you couldn’t draw insights from the combined data.

You'll want your tools to support connections to as many data stores and formats as possible, including unstructured data.

The challenge is working out what data you need and how you’ll combine, transform, and ingest it into your system, not to mention which data store and format are best for storing it.
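To make "combine" concrete, here is a small Python sketch that joins two sources on a shared key and then correlates them, echoing the hotel example from the introduction. The data, the `(hotel, date)` key, and the helper names are hypothetical. The numbers are toy values chosen to be perfectly linear, so the result is easy to check. On Python 3.10+, `statistics.correlation` computes the same Pearson coefficient.

```python
from math import sqrt

# Hypothetical sample data keyed by (hotel, date): daily thermostat averages
# (standing in for S3 flat files) and energy use (standing in for a spreadsheet).
thermostat_avg_c = {
    ("ams-1", "2024-01-01"): 20.0,
    ("ams-1", "2024-01-02"): 22.0,
    ("ams-1", "2024-01-03"): 24.0,
    ("ber-2", "2024-01-01"): 19.0,   # no matching energy record
}
energy_kwh = {
    ("ams-1", "2024-01-01"): 300.0,
    ("ams-1", "2024-01-02"): 340.0,
    ("ams-1", "2024-01-03"): 380.0,
    ("par-3", "2024-01-01"): 250.0,  # no matching thermostat record
}


def join_on_key(left: dict, right: dict) -> list[tuple]:
    """Inner join: keep only keys present in both sources."""
    return [(key, left[key], right[key]) for key in sorted(left.keys() & right.keys())]


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


rows = join_on_key(thermostat_avg_c, energy_kwh)
temps = [t for _, t, _ in rows]
kwh = [e for _, _, e in rows]
print(len(rows), round(pearson(temps, kwh), 3))  # 3 1.0
```

Neither source alone could produce this number. It only exists once both pools sit in one place under a common key.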

2. Hosting (and storing) your data

You'll need to be able to host your data and have it live in a known format. You could take on the initial outlay, maintenance costs, and staff for an on-premises solution. However, if you decide to self-host, consider the following questions:

- Which operating system should I run?

- What are my memory / disk requirements?

- What storage is most appropriate?

- Do I want to build redundancy into my system?

- What performance considerations should my system have?

- Are there latency requirements?

- Am I batching historical analytical data, or do I need to access data in real time?

Or, you could use a managed service with fixed costs. Self-hosting is a variable expense, and it often ends up costing more than a managed service. Aiven provides fully hosted and managed cloud databases and messaging services on all major cloud providers across all regions.

3. Flexing your data

Enterprises often build pipelines around extract, transform, load (ETL) processes that pose unique problems. A defect in one step of an ETL process can cascade into hours of intervention, affecting data quality, destroying consumer confidence, and making maintenance daunting.

ETL processes are also costly, static, and suited only to particular data types, schemas, data sources, and stores. This makes them a poor fit for analytics data, which needs flexible schemas because a data source or event schema can change over time.

You need to be able to flex your data today: analytics data pipelines must be elastic enough to accommodate all kinds of data with few constraints on schema or type.

ACID (atomicity, consistency, isolation, durability) databases are the best choice for transactional data with a static schema. In the past, ACID databases ran on commodity hardware on-premises and used standard ETL tools to translate between data stores.

Analytics data is different; it’s analyzed in bulk to understand larger trends. As your source applications and systems evolve, you’ll need to accommodate high-velocity, variable-length events, which calls for a schemaless model. Analytics data is also more likely to require the schema flexibility of a wide column store or other NoSQL offering.

Cassandra is schema-optional and column-oriented. Because it is a wide column store, you don’t need to model all of the required columns up front, and each row doesn’t need the same set of columns. InfluxDB is a schemaless time-series database, which lets you add new fields at any time.
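InfluxDB's flexibility shows up in its line protocol, the text format used to write points. Each line carries its own field names, so a new field can simply appear in later writes. The Python sketch below formats points as `measurement[,tags] fields [timestamp]` with the basic escaping rules. `to_line_protocol` and the `level_up` measurement are hypothetical names. Edge cases such as newlines in strings or NaN values are deliberately not handled, so check the InfluxDB line protocol reference (or use an official client library) for real writes.

```python
from typing import Optional


def _escape(s: str) -> str:
    # Tag keys, tag values, and field keys escape commas, equals signs, and spaces.
    return s.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def _format_field(value) -> str:
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"       # integer fields carry an "i" suffix
    if isinstance(value, float):
        return repr(value)
    text = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{text}"'           # string fields are double-quoted


def to_line_protocol(measurement: str, tags: dict, fields: dict, ts_ns: Optional[int] = None) -> str:
    """Format one point as: measurement[,tag=value...] field=value[,...] [timestamp]."""
    name = measurement.replace(",", r"\,").replace(" ", r"\ ")  # measurements escape commas and spaces
    tag_part = "".join(f",{_escape(k)}={_escape(str(v))}" for k, v in sorted(tags.items()))
    field_part = ",".join(f"{_escape(k)}={_format_field(v)}" for k, v in fields.items())
    line = f"{name}{tag_part} {field_part}"
    return line if ts_ns is None else f"{line} {ts_ns}"


# Early on, the game only reports a score...
print(to_line_protocol("level_up", {"player": "ana"}, {"score": 120}, 1700000000000000000))
# level_up,player=ana score=120i 1700000000000000000

# ...later, new fields appear in the same measurement without a schema migration.
print(to_line_protocol("level_up", {"player": "ana"},
                       {"score": 340, "rank": 7, "position": 2, "time_s": 93.5, "name": "Ana B"}))
# level_up,player=ana score=340i,rank=7i,position=2i,time_s=93.5,name="Ana B"
```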

Wide column stores like Cassandra have proven to be popular choices for analytics data, and Aiven offers Cassandra as a managed service.

4. Scaling with your data

Analysts sometimes still import data in isolated, all-or-nothing atomic batches. But today’s data volume and velocity, along with the need for real-time insights, render this approach ineffective. Analysts need data storage that scales automatically.

You may be getting your application, enterprise, or infrastructure analytics data from one device, system, or set of sensors today, but may have a million tomorrow. How do you manage an ever-growing velocity and volume of data?

You’ll be limited by your on-premises hardware and data store, which makes sharding and replication necessary. But these are complex and require hours of troubleshooting and rework when they go wrong. The best solution is a managed system that scales automatically as your data grows.
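One reason resharding hurts is easy to quantify. With naive hash-modulo placement, changing the shard count reassigns most keys, and every reassigned key is data that has to move. When going from 4 to 5 shards, a key stays put only when `h % 4 == h % 5`. That holds for 4 of every 20 residues, so roughly 80% of keys are expected to move. The Python sketch below is illustrative only: `stable_hash`, `shard_for`, and `fraction_moved` are hypothetical helpers, and SHA-256 is used simply as a stable, well-distributed hash.

```python
import hashlib


def stable_hash(key: str) -> int:
    # Python's built-in hash() for str is randomized per process, so use a digest instead.
    return int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big")


def shard_for(key: str, num_shards: int) -> int:
    # Naive placement: hash the key, then take it modulo the shard count.
    return stable_hash(key) % num_shards


def fraction_moved(keys: list[str], old_shards: int, new_shards: int) -> float:
    # Share of keys whose shard changes when the shard count changes.
    moved = sum(shard_for(k, old_shards) != shard_for(k, new_shards) for k in keys)
    return moved / len(keys)


keys = [f"player-{i}" for i in range(10_000)]
print(f"{fraction_moved(keys, 4, 5):.0%} of keys move when going from 4 to 5 shards")
```

Techniques such as consistent hashing reduce this churn. Someone still has to plan and operate the rebalancing, though, and that is part of the work a managed service takes on.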

5. Moving your data around

Depending on how your data is consumed, you'll need to get it from one place to another. Many enterprises run batch jobs nightly to take advantage of off-peak compute resources. As a result, the picture you see from your data is from yesterday, making real-time decisions impossible.

Enter the message queue. Earlier, developers moved data around by deploying custom data-transmission protocols. Since then we've seen JMS (a Java API, and therefore limited to transmission between Java components) and RabbitMQ, with the flexible, configurable publish-subscribe capabilities of AMQP.
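The core publish-subscribe idea fits in a few lines of Python: producers publish to a named topic, and every subscriber to that topic receives the message. `InMemoryBroker` is a hypothetical toy. It models neither JMS nor AMQP, and it has none of the persistence, acknowledgements, or networking a real broker provides.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class InMemoryBroker:
    """Toy publish-subscribe broker: every subscriber to a topic gets every message."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        """Deliver the message to all current subscribers; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


broker = InMemoryBroker()
analytics, alerts = [], []
broker.subscribe("thermostat", analytics.append)  # keeps every reading
broker.subscribe("thermostat", lambda m: alerts.append(m) if m["temp_c"] > 26 else None)
broker.publish("thermostat", {"hotel": "ams-1", "temp_c": 21.5})
broker.publish("thermostat", {"hotel": "ber-2", "temp_c": 27.0})
print(len(analytics), len(alerts))  # 2 1
```

The producer never needs to know who consumes its data. That decoupling is what lets new consumers, such as an alerting service, be added without touching the source.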

For large-scale data ingestion, Apache Kafka tends to be preferred among pub-sub messaging architectures because it partitions data so that producers, brokers, and consumers can scale incrementally as load and throughput expand. Aiven Kafka lets you deploy it in minutes.
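Kafka's scaling model rests on two ideas. First, messages with the same key go to the same partition, which preserves per-key ordering. Second, a topic's partitions are divided among the consumers in a group, so adding consumers spreads the load. The Python sketch below illustrates both, with stated simplifications. Kafka's default partitioner hashes keys with murmur2 rather than the SHA-256 used here, and the round-robin split stands in for Kafka's configurable assignment strategies. `partition_for` and `assign_round_robin` are hypothetical names.

```python
import hashlib


def partition_for(key: str, num_partitions: int) -> int:
    # Same key -> same partition. (Kafka's default partitioner uses murmur2, not SHA-256.)
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % num_partitions


def assign_round_robin(num_partitions: int, consumers: list[str]) -> dict[str, list[int]]:
    # Each partition gets exactly one owner within the consumer group.
    assignment: dict[str, list[int]] = {c: [] for c in consumers}
    for p in range(num_partitions):
        assignment[consumers[p % len(consumers)]].append(p)
    return assignment


print(partition_for("player-42", 6) == partition_for("player-42", 6))  # True
print(assign_round_robin(6, ["c1", "c2"]))        # {'c1': [0, 2, 4], 'c2': [1, 3, 5]}
print(assign_round_robin(6, ["c1", "c2", "c3"]))  # {'c1': [0, 3], 'c2': [1, 4], 'c3': [2, 5]}
```

Going from two consumers to three cuts each consumer's share from three partitions to two. The partition count sets the upper limit on parallelism within one group.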

6. Mashing up and transforming your data

In the past, companies focused on transactional data from production databases and on user engagement data from SDKs and generated events. Today, there are more data sources than ever: website tracking, cloud-stored flat files, CRM, other databases, eCommerce, marketing automation, ERP, IoT, other large datasets via REST APIs, mobile apps, and more. Mashing up and transforming data lets you draw competitive insights from all of it.
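Much of the mash-up work consists of mapping each source's own field names and timestamp formats onto one shared shape, so that records can be compared at all. The Python sketch below normalizes three hypothetical sources: a CRM row with an ISO 8601 timestamp, a web hit in epoch seconds, and an IoT reading in epoch milliseconds. The record layouts and the target shape are assumptions for illustration.

```python
from datetime import datetime, timezone

# Hypothetical raw records, each source with its own field names and time format.
crm_row = {"customer_id": "C-17", "updated": "2024-01-02T09:30:00+00:00", "stage": "won"}
web_hit = {"uid": "C-17", "ts": 1704187800, "path": "/pricing"}
iot_reading = {"device": "thermo-ams-1", "time_ms": 1704187800000, "temp": 21.5}


def normalize(source: str, raw: dict) -> dict:
    """Map a source-specific record onto one shared event shape with a UTC timestamp."""
    if source == "crm":
        ts = datetime.fromisoformat(raw["updated"])
        return {"source": source, "entity": raw["customer_id"], "ts": ts, "attrs": {"stage": raw["stage"]}}
    if source == "web":
        ts = datetime.fromtimestamp(raw["ts"], tz=timezone.utc)
        return {"source": source, "entity": raw["uid"], "ts": ts, "attrs": {"path": raw["path"]}}
    if source == "iot":
        ts = datetime.fromtimestamp(raw["time_ms"] / 1000, tz=timezone.utc)
        return {"source": source, "entity": raw["device"], "ts": ts, "attrs": {"temp_c": raw["temp"]}}
    raise ValueError(f"unknown source: {source}")


events = [normalize("crm", crm_row), normalize("web", web_hit), normalize("iot", iot_reading)]
events.sort(key=lambda e: (e["ts"], e["source"]))  # one timeline across all sources
print([e["source"] for e in events])  # ['crm', 'iot', 'web']
```

The three raw timestamps look nothing alike, yet after normalization they turn out to be the same instant. That comparison is impossible while each source keeps its own format.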

Different databases are better suited for different things.

As mentioned previously, Cassandra is a good high-availability, distributed data store, and InfluxDB is great for time-series data. But you may also need a key-value or traditional SQL store, or full-text search on unstructured data.

Redis is an in-memory, super fast, NoSQL key-value database that is also used as a cache and message broker because of its high performance. Aiven for Caching provides automatic setup and maintenance, with one-click provisioning.
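When Redis is used as a cache, it usually follows the cache-aside pattern. The application reads from the cache first; on a miss, it loads from the primary database and stores the result with an expiry. The Python sketch below uses a hypothetical in-memory `TTLCache` as a stand-in for Redis, so it runs without a server. With a real Redis client, the equivalent write would be a `SET` with an expiry (the `EX` option). `slow_lookup` is a hypothetical placeholder for a database query.

```python
import time
from typing import Callable, Optional


class TTLCache:
    """In-memory stand-in for a cache such as Redis: entries expire after `ttl` seconds."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock
        self._data: dict[str, tuple[float, object]] = {}

    def get(self, key: str) -> Optional[object]:
        entry = self._data.get(key)
        if entry is None or entry[0] <= self.clock():
            self._data.pop(key, None)  # drop expired entries lazily
            return None
        return entry[1]

    def set(self, key: str, value: object) -> None:
        self._data[key] = (self.clock() + self.ttl, value)


def cache_aside(cache: TTLCache, key: str, load_from_db: Callable[[str], object]) -> object:
    """Read the cache first; on a miss, load from the database and populate the cache."""
    value = cache.get(key)
    if value is None:
        value = load_from_db(key)
        cache.set(key, value)
    return value


db_calls: list[str] = []


def slow_lookup(key: str) -> str:  # hypothetical stand-in for a database query
    db_calls.append(key)
    return f"profile-for-{key}"


now = [0.0]                                # controllable clock for the example
cache = TTLCache(ttl=60, clock=lambda: now[0])
cache_aside(cache, "player:42", slow_lookup)  # miss: hits the database
cache_aside(cache, "player:42", slow_lookup)  # hit: served from the cache
now[0] = 61.0                                 # the entry has now expired
cache_aside(cache, "player:42", slow_lookup)  # miss again
print(len(db_calls))  # 2
```

Because `None` signals a miss in this sketch, it cannot cache `None` values. Real code usually stores a sentinel for "known absent" results instead.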

PostgreSQL is the go-to open-source object-relational DBMS for companies ranging from manufacturing to IoT. Aiven leverages its expertise to provide Aiven for PostgreSQL, the fastest, high-availability Postgres of any provider.

Need to search and index vast quantities of unstructured data? OpenSearch is a distributed document and full-text indexing service that supports complex data analytics in real time. Aiven for OpenSearch has the longest reach of all, spanning five major clouds.
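Full-text search engines are built around an inverted index, which maps each term to the documents that contain it. A query then looks up terms instead of scanning every document. The Python sketch below is a deliberately tiny version with hypothetical documents and AND-only queries. OpenSearch, which is built on Apache Lucene, adds configurable text analysis, relevance scoring, and distribution across shards on top of this idea.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # Lowercase, then split on anything that is not a letter or digit.
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map every term to the set of document IDs containing it."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """AND semantics: return the documents that contain every query term."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


docs = {
    "t1": "Thermostat in room 204 reports high temperature",
    "t2": "Guest complaint: room 204 too cold",
    "t3": "Energy invoice for January",
}
index = build_index(docs)
print(sorted(search(index, "room 204")))   # ['t1', 't2']
print(sorted(search(index, "Room cold")))  # ['t2']
```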

7. Visualizing everything

Finally, data doesn't necessarily come out the other end of the pipeline in a human-readable form. You need to be able to visualize everything. Data needs to be available in a form that can be further queried and analyzed, and eventually visualized.

Trends and correlations that are invisible in raw data often emerge most clearly when visualized.

Grafana is an open-source suite for metrics analytics, visualization, and alerting. Aiven Grafana helps you gain new insights by letting you build dashboards that draw on multiple data sources simultaneously.
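Dashboards can also be created programmatically through Grafana's HTTP API (`POST /api/dashboards/db`). The Python sketch below builds, but does not send, such a request, with two panels backed by different data sources. The base URL, token, data source UIDs, and queries are hypothetical. The per-target query fields (`query`/`rawQuery` for InfluxQL, `rawSql`/`format` for PostgreSQL) are assumptions to verify against your Grafana version's dashboard JSON model.

```python
import json
import urllib.request


def panel(title: str, ds_type: str, ds_uid: str, target: dict, x: int) -> dict:
    """One time-series panel bound to a single data source, placed side by side by `x`."""
    return {
        "type": "timeseries",
        "title": title,
        "datasource": {"type": ds_type, "uid": ds_uid},
        "gridPos": {"h": 8, "w": 12, "x": x, "y": 0},
        "targets": [{"refId": "A", **target}],  # query fields are datasource-specific (assumed)
    }


def dashboard_request(base_url: str, token: str, title: str, panels: list[dict]) -> urllib.request.Request:
    """Build (but do not send) a create-dashboard request for Grafana's HTTP API."""
    payload = {"dashboard": {"id": None, "uid": None, "title": title, "panels": panels}, "overwrite": False}
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/dashboards/db",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {token}"},
        method="POST",
    )


panels = [
    # Hypothetical data source UIDs and queries.
    panel("Room temperature", "influxdb", "influx-uid",
          {"query": 'SELECT mean("temp_c") FROM "thermostat" WHERE $timeFilter GROUP BY time($__interval)',
           "rawQuery": True}, x=0),
    panel("Energy use (kWh)", "postgres", "pg-uid",
          {"rawSql": "SELECT day AS time, kwh FROM energy ORDER BY 1", "format": "time_series"}, x=12),
]
req = dashboard_request("https://grafana.example.com", "YOUR_TOKEN", "Hotel energy", panels)
print(req.get_method(), req.full_url)  # POST https://grafana.example.com/api/dashboards/db
```

Sending the request would then be a call to `urllib.request.urlopen(req)` against a reachable Grafana instance.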

Wrapping up

A data pipeline is any set of processes designed for two things: to define what data to collect, where and how; and to extract, transform, combine, validate, and load the data for further analysis and visualization.

There are many ways to go about this, so it's best to look at all available options before you start building. To get an idea of the components used to build modern data pipelines, read our post on the future of data pipelines.

Next steps

If you're not using Aiven services yet, go ahead and sign up now for your free trial at https://console.aiven.io/signup!


In the meantime, make sure you follow our changelog and blog RSS feeds or our LinkedIn and Twitter accounts to stay up-to-date with product and feature-related news.
