Aiven at PGDataDay + PGDay Chicago 2025

Jay Miller represented Aiven at PGDay Chicago 2025. Dive into their recap of the event and key takeaways from many of the talks.

Jay Miller

|RSS FeedJay is a Staff Developer Advocate at Aiven. Jay has served as a keynote speaker and an avid member of the Python Community. When away from the keyboard, Jay can often be found cheering on their favorite baseball team.

Aiven was proud to sponsor PGDay Chicago. I was fortunate to attend and speak at the event and participate in the student-friendly PGDataDay by Prairie Postgres the day before. This was my first PostgreSQL Community event, and I was pleasantly surprised by the warm environment and the preparedness for the DataDay event.

The disappointing news from this conference is that talks were not recorded this year. However, I was able to compile my takeaways from the event and I’m happy to share some thoughts with you.

Who is this event for?

The inclusion of a PGDataDay focused on students made this event great for anyone involved in Database Administration at all levels, including beginners.

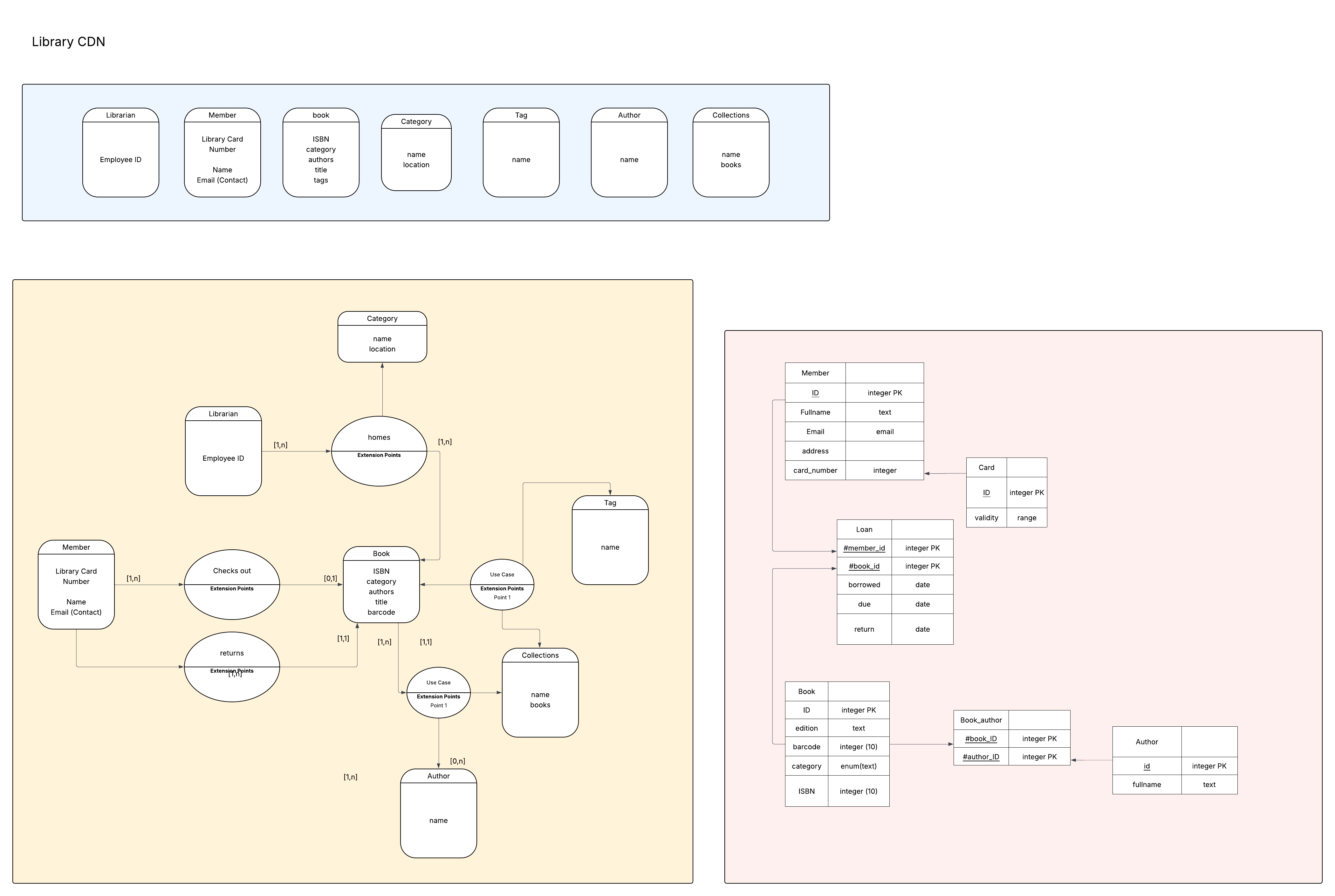

For those working in the industry, there were many talks that shared resources and concepts useful for getting the most from your PostgreSQL databases. Those hoping to take their first steps were given two wonderful primers in Lætitia Avrot’s and Shaun Thomas’ workshops. Lætitia transformed Data Modeling from a science to “an art form” with the Merise data modeling system, and Shaun provided a jam-packed session covering a massive range of topics, complete with tools and notes.

I would also mention this is a great conference to attend to meet members of the community in the Chicago tech space and surrounding areas. Attendees were mostly professionals in space and were eager to share their experiences in the industry.

What were some key takeaways

This conference was interesting as there didn’t feel like there was one singular topic. Talks ranged from a variety of ideas around performance optimization to using postgres in ways you may not think about out the gate. Here are some big ideas that stood out in a few talks.

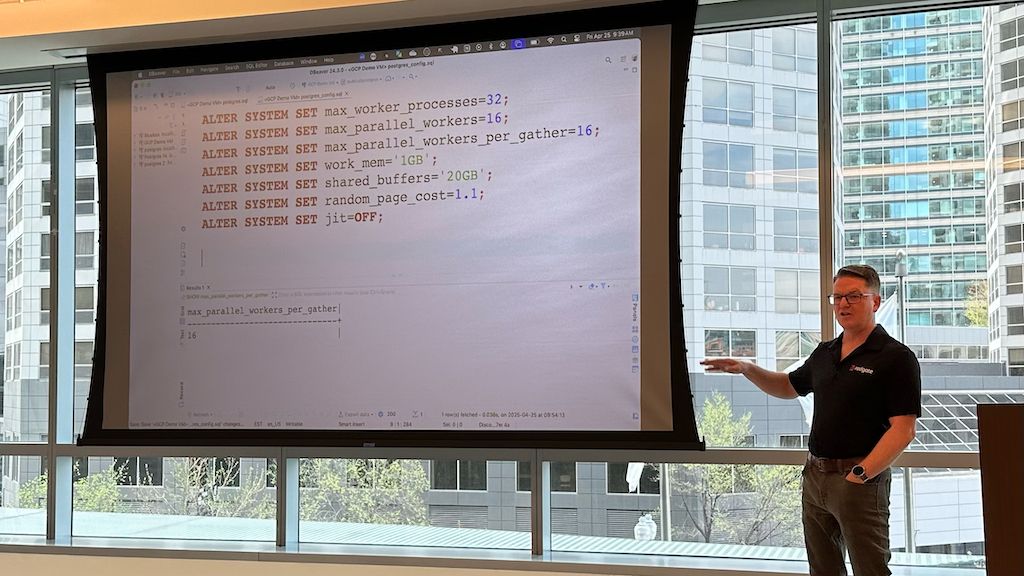

Postgres is plenty fast enough…when you know what to look for

My day started with Ryan Booz showing how with the right extensions and tools, like DuckDB, you could ingest a billion rows in a few seconds. There was also a conversation about which tools to choose based on your data’s use-case. For instance for fast processing of data that may be at the start of your journey, consider making an UNLOGGED table and bump up your max_parallel_workers, while not for everyone, can be used at the right moments for amazing performance gains on ingestion. If you’re building analytical workloads, you should make sure that you’re using the appropriate timeseries or OLAP services. Many of these tools are designed with fast ingestion in mind and can turn that single process copy into a multi-processed beast.

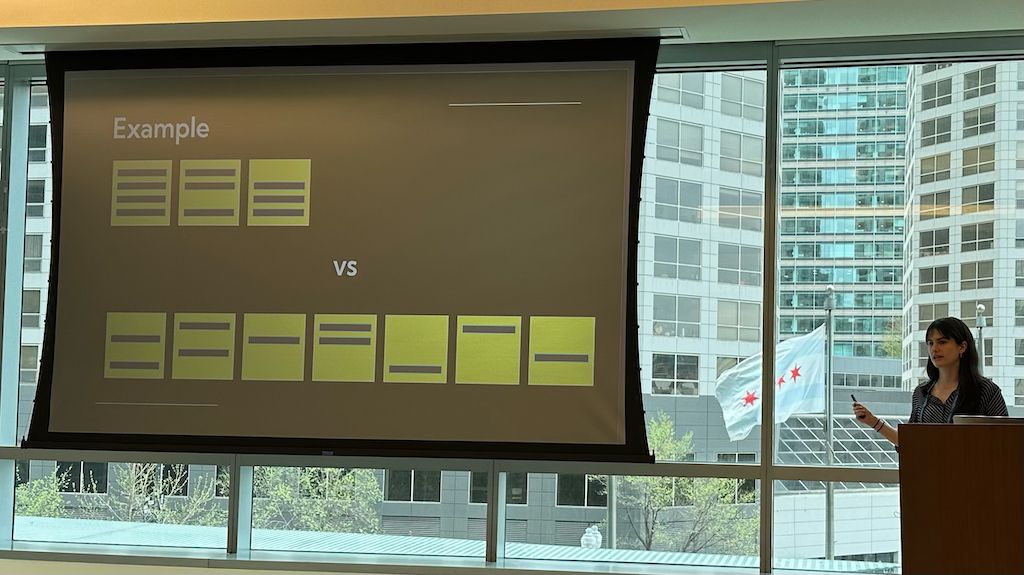

Of course there are also the slowdowns that seem to happen over time. This is where conversation around your maintenance configurations needed to happen. The DBA in a box had a wealth of settings, but also Chelsea Dole’s talk Table Bloat: Managing your Tuple Graveyard gave an important reminder that prevention of problems is almost always better than trying to fix them later. She taught us about pgstattuple, pg_class.reltuples, and many settings that we could tweak if we noticed the amount of dead tuples growing in our database.

These preventative resources were a great add to some of the other tools like query and index optimizers and many tips provided in our Optimization Guides for PostgreSQL.

PostgreSQL can be your everything tool

A common phrase is “start with PostgreSQL”. This is often because the flexibility of postgres as an open source database means that a wealth of solutions are available with PG as the base.

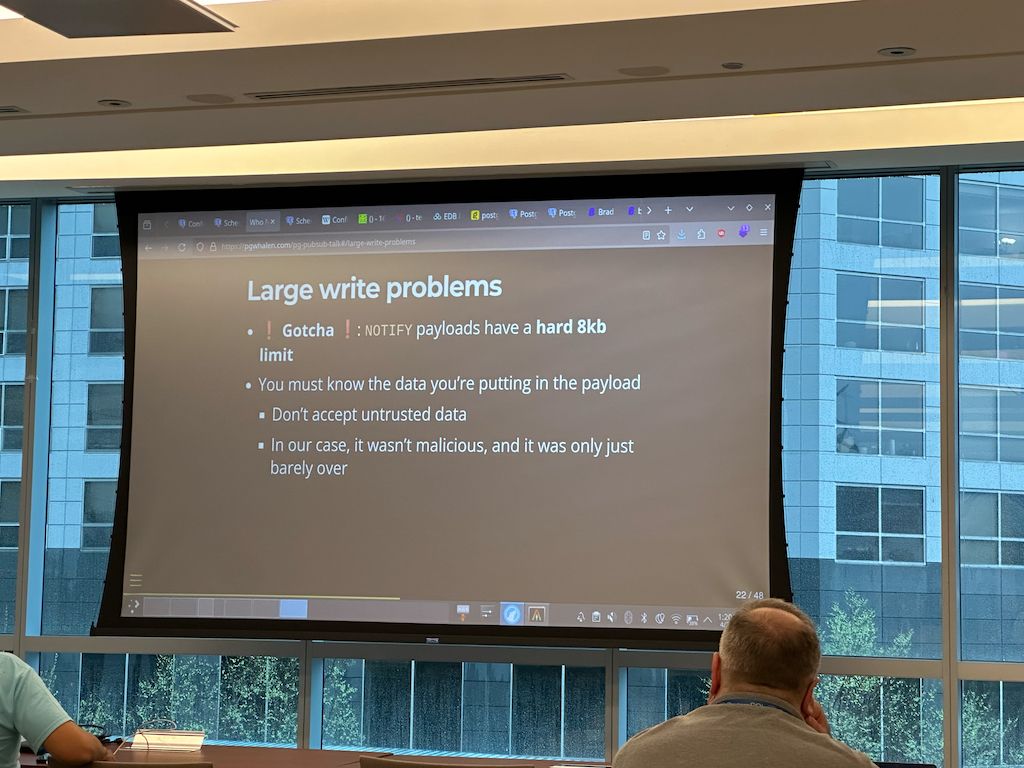

John Whalen kicked this topic off with showing us PubSub patterns that will have postgres looking similar to Kafka. pg_notify can do a wonderful job acting as a broker, letting other services know of new entries and more. We personally think Kafka will become an easier choice soon with KIP1150, but if your pipeline currently starts with PostgreSQL, you can turn many of those scheduled services into PubSub with few commands.

Aiven also brought something to the party as I took to the stage to share how moving my data from Aiven for OpenSearch into Aiven for PostgreSQL allowed me to leverage PG17’s new JSON_TABLE command and get insights that inspired me to increase the amount and variety of content that I ingested for my RAG applications. We also investigated how perhaps instead of relying on full text search or vector search for discovery, why not choose both? Implementing Hybrid search in our RAG applications improved accuracy of queries which lead to better AI responses.

I also think it’s good to look at using the right tool for the job. I would consider if a PG-based system like Aiven for AlloyDB Omni may simplify the process.

I would expect to hear more about this journey either at future postgres events or perhaps at an Aiven Workshop.

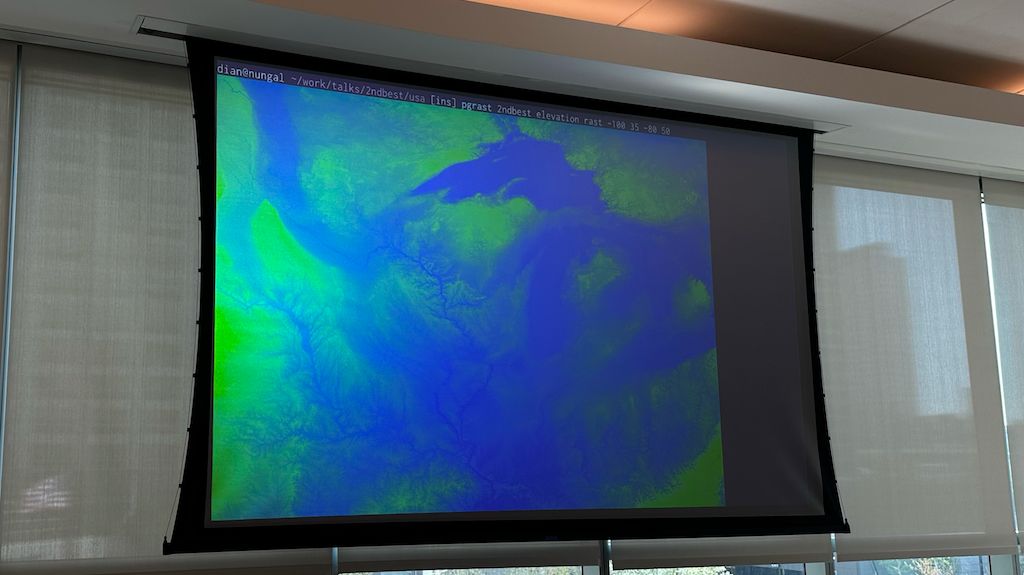

Lastly, Dian Fay showed us alternative ways to visualize PostgreSQL data. No more need for running a query inside of a python script when you can use tools that can help you create beautiful tables, charts, and even raster images directly in your terminal with tools like pgrast and others.

There is so much to look forward to

The good news is that there are many active meetups you can check out where a lot of these and other topics can be heard. There are also a wealth of postgres conferences around the world in places, like France, Germany, and later this year in New York City.

If you were on the fence about attending a Postgres conference, know that my first event was welcoming, warm (yes even in the Windy City), and full of amazing organizers, speakers, and attendees that I can’t wait to see at the next conference!

Stay updated with Aiven

Subscribe for the latest news and insights on open source, Aiven offerings, and more.