Using PostgreSQL Anonymizer to safely share data with LLMs

Learn how PostgreSQL Anonymizer helps safely share data with LLMs by masking PII, using both static and dynamic masking strategies for secure development.

Jay Miller

|RSS FeedJay is a Staff Developer Advocate at Aiven. Jay has served as a keynote speaker and an avid member of the Python Community. When away from the keyboard, Jay can often be found cheering on their favorite baseball team.

Using PostgreSQL Anonymizer to safely share data with LLMs

The promise of large language models (LLMs) brings new performance gains to development teams, sharing information and context to your team. How is this information stored?

AI companies cache and persist information. This means queries and their results are stored in a database somewhere. I was able to retrieve prompts across multiple devices meaning that the information was not held to my local machine. AI companies talk about how data is secured, but as we saw with DeepSeek, AI companies are not immune to database setup mistakes leading to data leaks. There is also the issue of retained history and context that more and more AI tools are serving as a feature. That information can be retrieved by a bad actor using zero-click exploits. So as we speed up our own development using LLMs, we need to ensure that we do it in a way that protects the information of our users.

Meet PostgreSQL Anonymizer, the data masking extension now available by default with Aiven for PostgreSQL®. PostgreSQL Anonymizer lets you declare and mask columns with personal identifiable information (PII).

PostgreSQL Anonymizer aids the principle of least privilege (POLP), allowing for even finer access to information. LLMs and other users only interact with anonymized information, which minimizes exposure of sensitive data. Everything that we’re explaining about how LLMs will query masked data applies to any user under the same roles.

This post will show you two strategies you can use to work with anonymized data when using LLMs and MCP.

Our Data Model

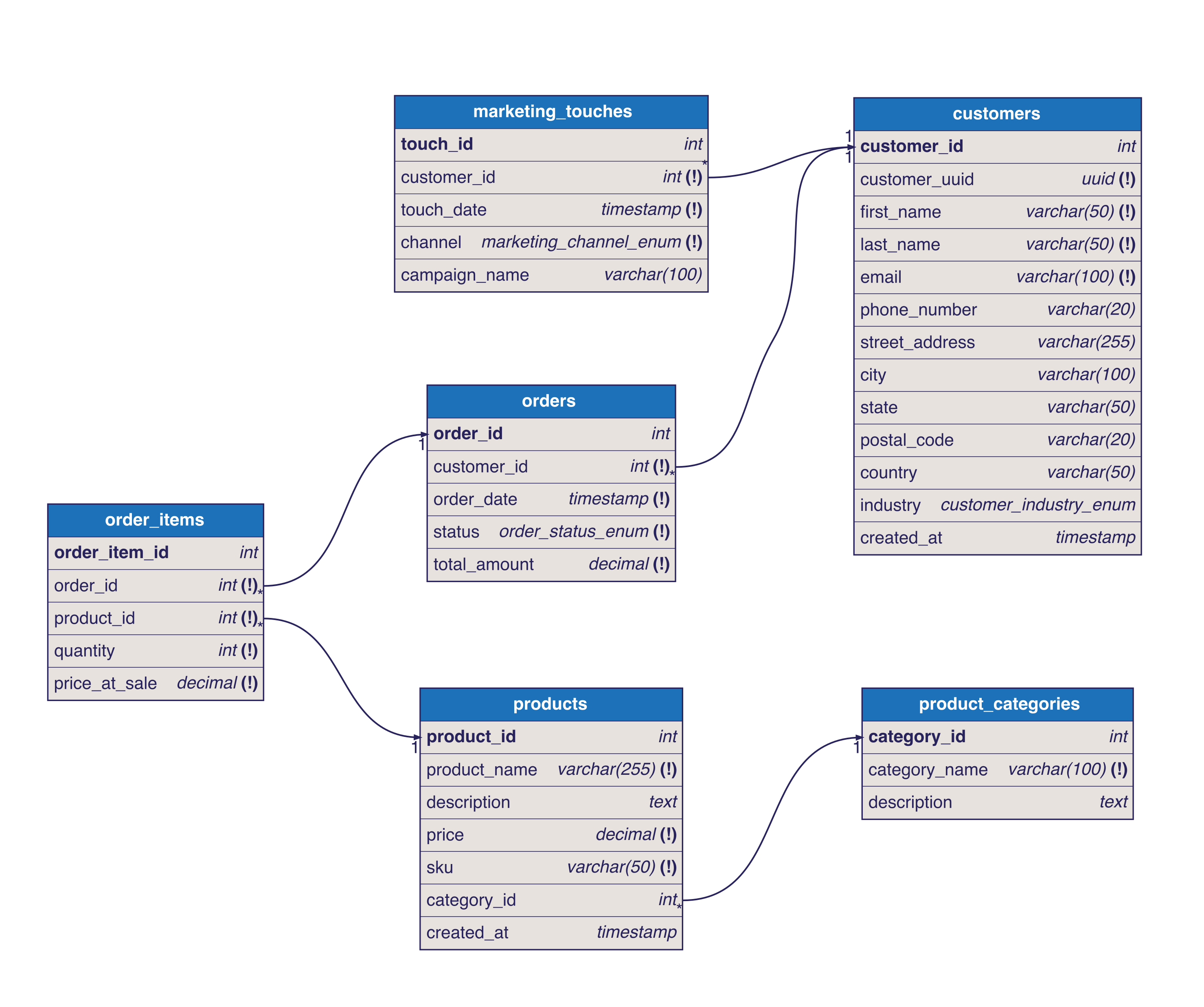

Here is an example B2B Ecommerce Platform schema. All of the data is synthetic and made up.

You're asked to help with a marketing campaign that segments customers within designated service regions and industries and finds sales trends across those segments. Our customer schema is full of personal information that an ecommerce company would need to fulfill orders. Using this information is critical to the request but creates opportunities for data breaches and data privacy violations.

⚠️ Don't put your company at risk by sharing PII

This is a reminder that models by default are non-deterministic. This means a model with access to your database may collect information in different and unexpected ways. Unfortunately, Model Context Protocol (MCP) does not help with this. Many of the MCP servers I've tested often start with the widest ways to search, starting with learning the schema and all the tables and then retrieving a sample of data to verify the format of the content.

Before we talk more about PostgreSQL Anonymizer, make sure you protect your customer's information by:

- consider principle of least privilege (POLP) and limit all users access to only views and tables they absolutely need.

- consider column-level security and row-level security where applicable

- consider hashing and salting sensitive data

What happens when we ask AI with unfettered access

Let's see what happens when you give the LLM read access to the entire database. Using Claude 4.0 Pro using crystaldba/postgres-mcp in restricted mode (meaning is not able to write to the database... Only READ) I got a wonderful response that identified primary and secondary segmentation criteria as well as customer value metrics that should be tracked. When I asked it to compare its plan with the current database schema, it was able to pull the data and create a series of queries that would connect with our database and view the entire database schema.

Loading code...

As I mentioned earlier, this shows that LLMs will consider pathways outside of what is expected and will query tables that you may not have expected.

A few lines later in the response we get a request for a lot of PII. The actual query isn't important but as you look at it take a look at the amount of PII that it attempts to grab.

Loading code...

This isn't a problem, it's necessary. This is the foundation of the task that we're trying to accomplish. The problem is that if this request had permission to run, it would have created this view which if called would display all of the customer information.

All this to say, if it's in the memory or data chain of an LLM, you have no control over how it can be retrieved later. The best approach is to never upload it to begin with.

Fast Fix - Fork, Transform and Share

PostgreSQL Anonymizer has the ability to permanently randomize data through static masking. Depending on the size of the database (and its contents), we can fork the database and anonymize our PII before passing it to the LLM.

Let's imagine if our database had the following customer information

Loading code...

**Step 1:**Let's fork our existing database. In the Aiven console you can go to your service overview page for the PG instance that you want to work with. In the Backups and forking section, select the three dots and then fork service.

Another way to do this is with the Aiven CLI.

Loading code...

Step 2: We need to connect to the forked database as an admin and enable the PostgreSQL Anonymizer service.

Connect using your favorite PostgreSQL client (if you have psql you can use the avn cli again)

Loading code...

::NOTE: STATIC ANONYMIZATION IS A PERMANENT CHANGE. DO NOT RUN THE FOLLOWING ON YOUR PRODUCTION DATABASE

Loading code...

This will copy the datasets used for creating anonymized services.

Step 3: Create SECURITY LABELS for the columns that should be anonymized.

Loading code...

Those functions anon.dummy_first_name() and the others populate from those datasets that we loaded with anon.init(). There are several common patterns that you can choose from. You can see the full list of functions in the documentation.

Step 4: Apply the changes. We could apply this at the table level with

Loading code...

Or we can apply all the changes we've made with

Loading code...

Now when we run that same query from that we did at the beginning we would get something like.

Loading code...

If we do this to all our data we can then give this information to the LLM and trust that no customer data will be released.

Do we need to make another database?

No, we don’t. PostgreSQL Anonymizer has dynamic masking. This masks data for specified certain roles but will not permanently change the rows. This means when your LLM makes a query the results are anonymized.

Let’s apply this to our marketing project.

Step 1: Create a view that removes some of the PII that you want the LLM doesn’t need

Loading code...

This reduces the amount of masking needed. With this running with every query we want to optimize the process as much as possible.

Step 2: Apply the policy to the role that we made for the LLM.

Loading code...

Now when the model uses MCP to learn more about the database it will only find the tables and views that it has access to.

This is useful but limits the LLM's ability to learn and work outside the lines. There is still the customer email which is PII. That's intentionally left in since this an email marketing campaign and we’d want to use it later with privileged accounts.

::NOTE: You should still not give AI access to any production databases. Use with caution

Step 3: Start the extension and the dynamic masking engine.

Loading code...

The SET anon.transparent_dynamic_masking TO true; starts the dynamic masking engine. Now as before we create our security labels. And apply the label to the user account.

Loading code...

Notice we applied the mask to the view and not the original column. If you apply this to the original column, the data will not change in the referenced view. You have to apply the mask to what will be called in the query.

Now when that role (the one we'll give to LLMs and MCP servers) starts its exploration. Interesting enough it learned about the relationships to the customers table and got confused and assumed that we hadn’t created the table yet (HOW DARE YOU NOT GIVE IT ACCESS 😂). After I mentioned the view it immediately began working on building customer segmentation by trying to get all the information.

Loading code...

It then proceeded to generate a campaign highlighting top performing Segments and the states they were in. Here’s a sample.

Loading code...

When I ask it more information about that customer at ID:0

Loading code...

Use PostgreSQL Anonymizer with your LLMs and your employees

This introduction to PostgreSQL Anonymizer shows how you can secure PII from LLMs. These tips also work to help you follow the principle of least privilege. There are other use cases for the anonymizer such as anonymizing data to train your own models, as well as other masking strategies that would dump masked data to other formats and data types. Lastly, there are many different masking functions for all kinds of different information. You can learn more about these at the PostgreSQL Anonymizer Documentation

Learn more about this extension and the 50+ other extensions available in the Aiven for PostgreSQL today.

Stay updated with Aiven

Subscribe for the latest news and insights on open source, Aiven offerings, and more.